AI Applications in Modern Infrastructure Management

Why AI Matters in Infrastructure: Context and Outline

Modern infrastructure—power grids, transport corridors, water systems, public facilities, industrial campuses—operates under relentless pressure: higher service expectations, tighter budgets, stricter safety rules, and climate volatility. Artificial intelligence helps reconcile these demands by turning data exhaust into operational foresight. The practical arc is simple but powerful: automate what is stable, predict what is fragile, and analyze what is uncertain. Think of it as a layered safety net where each thread strengthens the next, reducing downtime, trimming energy waste, and freeing experts to focus on higher‑value work.

Outline for this guide:

– Automation: control loops, orchestration across assets, human-in-the-loop safeguards, and resilience by design.

– Predictive Maintenance: sensing strategies, models for early failure detection, and realistic intervention playbooks.

– Data Analytics: pipelines from edge to core, KPIs that matter, and the habits that keep data trustworthy.

– Integration: reference architectures that align people, processes, and platforms without overcomplicating the stack.

– Governance and ROI: security, ethics, cost models, and a roadmap that scales from pilot to production.

Why now? Sensor costs have fallen, connectivity has broadened, and edge compute can now process signals in near real time. At the same time, maintenance budgets face scrutiny and skilled technicians are stretched thin, which strengthens the case for prioritizing interventions before breakdowns occur rather than reacting afterward. Studies across utilities and manufacturing repeatedly show that digital programs, when well governed, can cut unplanned downtime by double‑digit percentages and reduce energy consumption measurably. The challenge is not potential; it is disciplined execution. This guide translates broad concepts into concrete steps, pragmatic metrics, and organizational patterns you can reuse.

Three north stars guide the recommendations:

– Reliability first: automation should fail safe and degrade gracefully.

– Transparency: models must explain enough to be trusted on the shop floor and in the control room.

– Value discipline: milestones tie to a business case, not to novelty.

Automation in the Field and Control Room

Automation is the backbone of AI-enabled infrastructure, coordinating thousands of micro‑decisions per minute that humans cannot reliably sustain. At the device layer, sensors feed programmable logic, which governs actuators through defined control rules. At the supervisory layer, orchestration systems coordinate setpoints, schedules, and alarms across multiple assets. Above this, policy engines enforce operating envelopes for safety, compliance, and energy efficiency. Together, these layers standardize behavior, cut manual variability, and create the stable baseline AI needs to reason effectively.

Practical patterns emerge across sectors. In a water network, automated valves manage pressure zones to prevent bursts, while pumps are sequenced to minimize peak tariffs. In a district energy plant, chillers and thermal storage are dispatched to flatten demand while keeping comfort within tight bounds. In rail operations, signaling automation spaces trains safely, smoothing throughput. Each example shares a theme: encode local physics and operational constraints first, then add optimization that balances cost, risk, and service level.

Quantifiable gains are common when automation is thoughtfully deployed:

– A 20–40% reduction in manual interventions, lowering error rates and overtime.

– Faster response to anomalies, shrinking minor disturbances before they cascade.

– Energy savings through continuous tuning, often in the 5–15% range for buildings and plants.

– More consistent quality and compliance reporting, easing audits and regulatory checks.

To sustain these results, design for resilience. Automation should fail safe, reverting to conservative modes when data quality drops, network links fail, or sensors drift. Human‑in‑the‑loop escalation is vital for ambiguous states; operators must be able to override safely, with every override logged and traceable. Cybersecurity is integral, not bolted on: segment critical networks, enforce least‑privilege access, and monitor for anomalous traffic. Finally, document assumptions in plain language. When crews change shifts at 2 a.m., clarity is uptime’s quiet ally. And remember: automation is not set‑and‑forget; it is a living system that needs periodic tuning as equipment ages and demand patterns shift.
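The fallback logic described above can be sketched in a few lines. This is a minimal illustration, not a reference design: the mode names, the `Inputs` fields, and the two thresholds are all hypothetical, and real limits would come from the site's own safety case.

```python
from dataclasses import dataclass
from enum import Enum


class Mode(Enum):
    OPTIMIZE = "optimize"          # normal automated optimization
    CONSERVATIVE = "conservative"  # fixed, safe setpoints
    MANUAL = "manual"              # operator has taken control


@dataclass
class Inputs:
    data_age_s: float        # seconds since the last valid sensor reading
    link_up: bool            # is the link to the supervisory layer alive?
    sensor_drift: float      # deviation from a reference sensor
    operator_override: bool  # has an operator requested manual control?


# Hypothetical limits, chosen only for illustration.
MAX_DATA_AGE_S = 30.0
MAX_DRIFT = 0.5


def select_mode(x: Inputs) -> tuple[Mode, str]:
    """Return the operating mode plus a plain-language reason for the audit log."""
    if x.operator_override:
        return Mode.MANUAL, "operator override requested"
    if not x.link_up:
        return Mode.CONSERVATIVE, "network link down"
    if x.data_age_s > MAX_DATA_AGE_S:
        return Mode.CONSERVATIVE, "sensor data stale"
    if abs(x.sensor_drift) > MAX_DRIFT:
        return Mode.CONSERVATIVE, "sensor drift beyond limit"
    return Mode.OPTIMIZE, "all inputs healthy"
```

Returning a reason string alongside the mode is one way to keep mode changes traceable: the same text can go to the operator display and the shift log.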

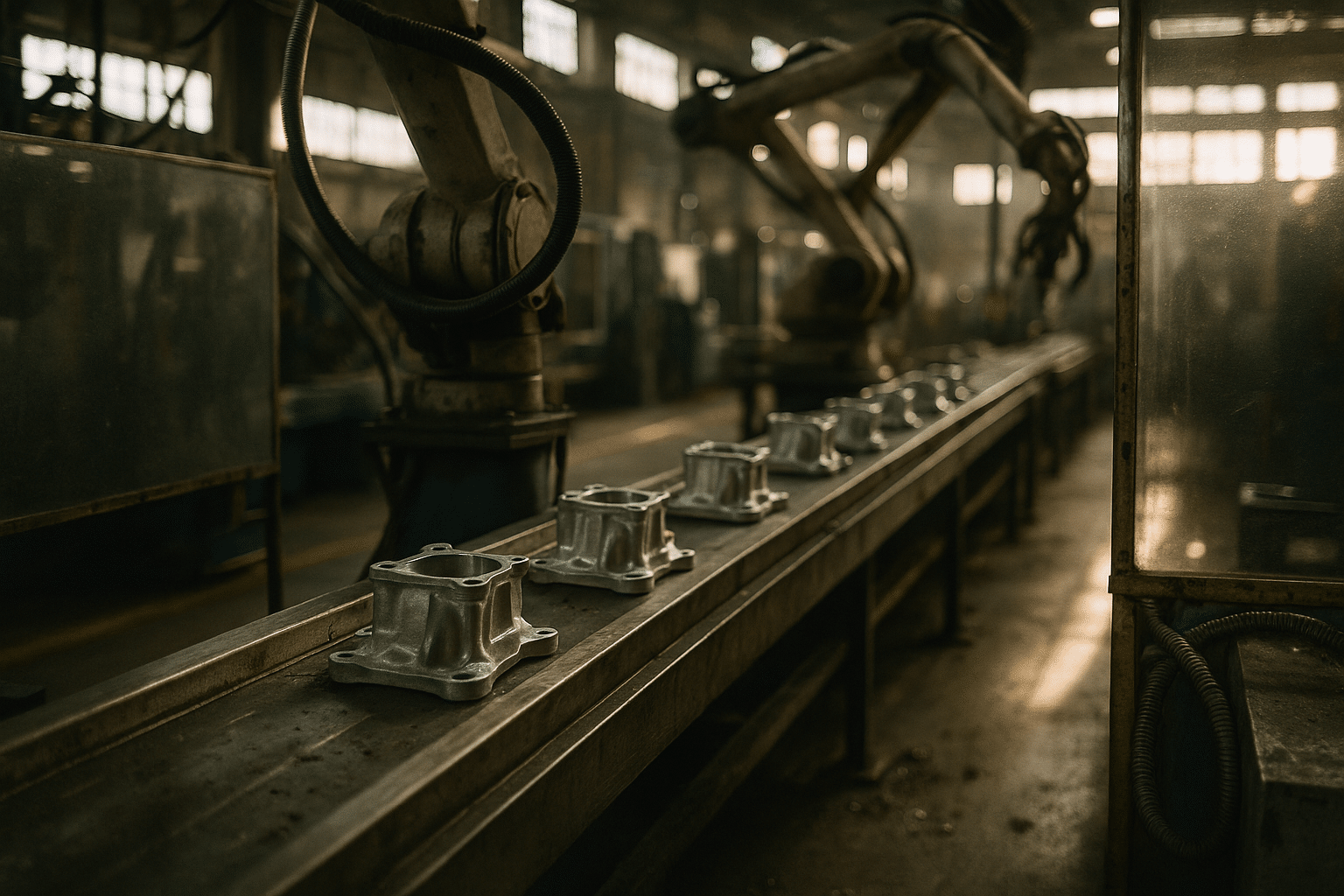

Predictive Maintenance: From Signals to Decisions

Predictive maintenance turns condition data into early warnings that convert costly surprises into scheduled tasks. The recipe blends sensing, signal processing, and modeling with a practical maintenance workflow. Common signals include vibration, temperature, electrical current, pressure, lubricant quality, acoustic emissions, and visual anomalies from cameras. Each modality has strengths: vibration flags bearing wear, thermal patterns reveal insulation and alignment issues, oil chemistry points to internal friction or contamination, and current signatures uncover motor defects.

The path from raw signal to decision typically follows four steps:

– Ingest and clean: synchronize data streams, filter outliers, and correct for sensor drift.

– Extract features: compute indicators such as spectral peaks, kurtosis, temperature deltas, or pressure variance.

– Model health: use rules, statistical baselines, or machine learning to estimate remaining useful life and failure probability.

– Orchestrate action: trigger inspections, order parts, and schedule downtime slots aligned with production or service windows.
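As a rough illustration of the feature-extraction step, the sketch below computes three of the indicators named above using only the Python standard library. The function names and the choice of window-based definitions (for example, temperature delta as maximum minus minimum within a window) are assumptions for the example; a real program would pick definitions that match its assets and sensors.

```python
import statistics


def kurtosis(samples: list[float]) -> float:
    """Population kurtosis; a normal distribution gives roughly 3.0.

    Impulsive vibration (for example from bearing defects) tends to
    raise this value above the healthy baseline.
    """
    mean = statistics.fmean(samples)
    var = statistics.pvariance(samples, mu=mean)
    if var == 0:
        return 0.0  # flat signal: kurtosis is undefined, report 0.0
    fourth = sum((s - mean) ** 4 for s in samples) / len(samples)
    return fourth / var ** 2


def extract_features(vibration: list[float],
                     temperature: list[float],
                     pressure: list[float]) -> dict[str, float]:
    """Summarize one time window of cleaned readings into features."""
    return {
        "vibration_kurtosis": kurtosis(vibration),
        "temperature_delta": max(temperature) - min(temperature),
        "pressure_variance": statistics.pvariance(pressure),
    }
```

The resulting dictionary is the kind of compact record a health model or a rule set could consume in the next step.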

Evidence from cross‑industry programs indicates that predictive approaches can reduce unplanned downtime by 30–50% and maintenance costs by 10–30%, while extending asset life by 20–40%. The exact numbers depend on asset criticality and data maturity, but the direction is consistent: catching faults earlier is cheaper than fixing them after collateral damage accumulates. Consider a mid‑sized wastewater facility where a single pump outage can spill into compliance penalties and emergency trucking. A modest sensor kit plus a trained model that detects cavitation weeks in advance can pay for itself after one avoided incident.

Two pitfalls are common and avoidable. First, data poverty: there are often too few failure examples to train complex models. Counter with hybrid methods that combine physics‑informed rules and anomaly detection, focusing on deviations from healthy baselines rather than attempting to classify every fault type. Second, alert fatigue: too many low‑value notifications erode trust. Mitigate by ranking alerts with consequence scoring (likelihood multiplied by impact) and by bundling related symptoms into a single case. Close the loop by labeling outcomes after inspections; continuous feedback improves precision over time.
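A minimal sketch of consequence scoring and alert bundling might look like the following. The `Alert` fields, the cost units, and the rule of grouping by asset and ranking each case by its worst score are illustrative assumptions; other grouping keys (failure mode, location) may fit better in practice.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Alert:
    asset_id: str
    symptom: str
    likelihood: float  # estimated probability of failure, 0..1
    impact: float      # consequence in arbitrary cost units


def consequence_score(alert: Alert) -> float:
    """Likelihood multiplied by impact."""
    return alert.likelihood * alert.impact


def bundle_and_rank(alerts: list[Alert]) -> list[dict]:
    """Group alerts per asset into one case, ranked by the worst score."""
    by_asset: dict[str, list[Alert]] = defaultdict(list)
    for alert in alerts:
        by_asset[alert.asset_id].append(alert)
    cases = [
        {
            "asset_id": asset_id,
            "symptoms": sorted(a.symptom for a in group),
            "score": max(consequence_score(a) for a in group),
        }
        for asset_id, group in by_asset.items()
    ]
    return sorted(cases, key=lambda c: c["score"], reverse=True)
```

With this shape, two symptoms on the same pump surface as one case for the planner instead of two competing notifications.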

Finally, embed predictive maintenance into the broader maintenance playbook. Tie recommendations to spare‑parts logistics, technician skill sets, and regulatory documentation. Publish clear service windows and communicate expected downtime early to affected stakeholders. The goal is not a perfect prediction; it is a timely, actionable plan that preserves safety, uptime, and budget.

Data Analytics: Architectures, Metrics, and Use Cases

Data analytics is the interpretive layer that lets automation and predictive maintenance prove their worth. The architecture begins at the edge, where gateways align timestamps and compress data, then continues through a secure transport into a central store or a federated mesh. A minimal pipeline includes acquisition, quality checks, contextualization (linking sensors to assets, locations, and processes), feature computation, model execution, and visualization. When decisions must be fast, inference runs at the edge with summarized results synchronized upstream for fleet‑level insight.

Good analytics depends on good data habits:

– Define a common asset model so that “Pump‑3” in one site means the same as “P‑003” in another.

– Track sensor lineage, calibration status, and expected ranges to detect drift early.

– Record units and sampling rates; resampling errors silently poison conclusions.

– Log model versions and thresholds; traceability is your audit trail when outcomes are reviewed.
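Two of these habits, a shared asset model and expected ranges per sensor, can be sketched as simple lookup tables. Everything named here (the site labels, the canonical ID `pump-003`, the signal name, the range, and the 10% threshold) is hypothetical and only shows the shape of the idea.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SensorSpec:
    unit: str
    sample_rate_hz: float
    low: float   # expected range, lower bound
    high: float  # expected range, upper bound


# Hypothetical registry: site-local tags resolve to one canonical asset ID.
ASSET_ALIASES = {
    ("site-a", "Pump-3"): "pump-003",
    ("site-b", "P-003"): "pump-003",
}

# Hypothetical spec: units, sampling rate, and expected range per signal.
SENSOR_SPECS = {
    ("pump-003", "discharge_pressure"): SensorSpec("bar", 1.0, 1.5, 6.0),
}


def out_of_range_fraction(readings: list[float], spec: SensorSpec) -> float:
    """Share of readings outside the expected range (0.0 for no data)."""
    if not readings:
        return 0.0
    bad = sum(1 for r in readings if not spec.low <= r <= spec.high)
    return bad / len(readings)


def check(site: str, local_tag: str, signal: str,
          readings: list[float], max_fraction: float = 0.1) -> dict:
    """Resolve the local tag, then flag the sensor if too many readings stray."""
    asset = ASSET_ALIASES[(site, local_tag)]
    spec = SENSOR_SPECS[(asset, signal)]
    fraction = out_of_range_fraction(readings, spec)
    return {
        "asset": asset,
        "unit": spec.unit,
        "out_of_range": fraction,
        "suspect": fraction > max_fraction,
    }
```

A flagged sensor is a prompt to check calibration, not proof of a fault: the process itself may have moved outside its usual range.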

Metrics that matter vary by domain but often include uptime, energy intensity, maintenance backlog, mean time between failures, mean time to repair, and spare‑parts turns. Composite indices help summarize performance for executives while allowing engineers to drill down. For example, a reliability score can weight critical assets more heavily, ensuring that wins in low‑impact areas do not mask risks in high‑consequence equipment. Visualization should tell a story: trend lines for degradation, histograms for alert precision, and scatter plots for load versus efficiency.
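To make the metrics concrete, here is a small sketch of mean time between failures, mean time to repair, availability, and a criticality-weighted reliability score. The weighting scheme (a weighted average of per-asset availability) is one plausible choice assumed for illustration, not a standard the article prescribes.

```python
def mtbf_hours(operating_hours: float, failures: int) -> float:
    """Mean time between failures; infinite if nothing failed."""
    return operating_hours / failures if failures else float("inf")


def mttr_hours(repair_hours: list[float]) -> float:
    """Mean time to repair; 0.0 if there were no repairs."""
    return sum(repair_hours) / len(repair_hours) if repair_hours else 0.0


def availability(mtbf: float, mttr: float) -> float:
    """Steady-state availability: MTBF / (MTBF + MTTR)."""
    if mtbf == float("inf"):
        return 1.0
    return mtbf / (mtbf + mttr)


def weighted_reliability(assets: list[tuple[float, float]]) -> float:
    """Weighted average of (availability, criticality_weight) pairs."""
    total_weight = sum(weight for _, weight in assets)
    return sum(avail * weight for avail, weight in assets) / total_weight
```

For example, `weighted_reliability([(1.0, 1.0), (0.5, 3.0)])` gives 0.625: the poorly performing critical asset pulls the score well below the unweighted average of 0.75, which is the behavior the composite index is meant to have.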

Representative use cases illustrate value. In a transit depot, analytics can schedule electric fleet charging to avoid demand spikes while ensuring routes start fully charged. In a campus of public buildings, heat maps can reveal zones that consistently miss temperature targets, pointing to ventilation faults or envelope leaks. In a wind corridor, cross‑site comparisons can isolate performance outliers, prompting blade inspections before yield drops spread. Across these scenarios, the playbook is similar: small pilots to validate signal strength, then scaled rollouts with standardized templates and training.

Beware common traps. Dashboards without decision rights turn into wall art; assign owners and clear triggers for action. Excessive centralization slows local problem‑solving; keep analytics close to operations while enforcing shared standards. And do not chase novelty at the expense of reliability—simple control charts and baseline models can outperform complex approaches when data is sparse or noisy.
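As an example of how little machinery a simple control chart needs, the sketch below derives limits from a healthy baseline and flags new points that fall outside them. The three-sigma default and the function names are assumptions for illustration.

```python
import statistics


def control_limits(baseline: list[float], sigmas: float = 3.0) -> tuple[float, float]:
    """Lower and upper control limits from a healthy baseline period."""
    mean = statistics.fmean(baseline)
    sd = statistics.stdev(baseline)  # sample standard deviation
    return mean - sigmas * sd, mean + sigmas * sd


def out_of_control(baseline: list[float], new_points: list[float],
                   sigmas: float = 3.0) -> list[tuple[int, float]]:
    """Return (index, value) for each new point outside the limits."""
    low, high = control_limits(baseline, sigmas)
    return [(i, x) for i, x in enumerate(new_points) if x < low or x > high]
```

Because the limits come only from the baseline, the chart needs no failure examples at all, which is why it holds up when data is sparse.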

Integration, Governance, and the Road Ahead

Bringing automation, predictive maintenance, and analytics together requires an integration blueprint that respects both physics and people. A pragmatic approach starts with a hybrid architecture: real‑time control and fast inference at the edge, fleet analytics and historical learning in a central environment, and a clear contract between the two. Interfaces should be open, documented, and versioned, allowing sensors and applications to evolve without breaking everything upstream.
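One way to picture the "clear contract" between edge and central systems is a versioned message that the central side validates on receipt. The sketch below is an assumption-laden illustration: the `EdgeSummary` fields, the JSON encoding, and the rule that only the major version must match are all hypothetical choices.

```python
import json
from dataclasses import asdict, dataclass, fields

SUPPORTED_MAJOR = 1  # major schema version this consumer understands


@dataclass
class EdgeSummary:
    schema_version: str  # "major.minor", for example "1.0"
    asset_id: str
    window_start: str    # ISO 8601 timestamp
    window_s: int        # length of the summarized window in seconds
    health_score: float


def encode(summary: EdgeSummary) -> str:
    """Serialize a summary at the edge."""
    return json.dumps(asdict(summary))


def decode(payload: str) -> EdgeSummary:
    """Parse a summary centrally, rejecting incompatible major versions."""
    data = json.loads(payload)
    major = int(str(data["schema_version"]).split(".")[0])
    if major != SUPPORTED_MAJOR:
        raise ValueError(f"unsupported schema major version: {major}")
    # Ignore unknown fields so minor-version additions do not break consumers.
    known = {f.name for f in fields(EdgeSummary)}
    return EdgeSummary(**{k: v for k, v in data.items() if k in known})
```

Tolerating unknown fields while rejecting a new major version lets edge devices add data over time without forcing a simultaneous upgrade of everything upstream.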

Governance keeps momentum sustainable:

– Security by design: segment networks, rotate credentials, and monitor behavior rather than relying solely on perimeter defenses.

– Data stewardship: assign owners for key datasets, with quality checks automated and exceptions visible.

– Model lifecycle: document training data, explainability notes, and acceptance criteria; retire models that no longer meet standards.

– Ethics and compliance: codify rules for privacy, worker safety, and environmental obligations.

Value realization is about disciplined sequencing. Tie each milestone to a specific cost or risk lever: downtime hours eliminated, penalties avoided, energy saved, or service levels improved. Pilot on high‑impact, narrow‑scope assets where access is straightforward and outcomes can be measured within a quarter. Scale through templates, not one‑off projects: reusable data schemas, alert playbooks, and commissioning checklists. Budget for change management, because technicians need training and operators need to trust that automation supports rather than replaces their judgment.

Looking forward, three trends deserve attention. First, contextual AI that blends text, time series, and diagrams will make root‑cause analysis faster by linking logs to sensor traces and procedures. Second, simulation‑in‑the‑loop will allow teams to test control strategies against realistic disturbances before touching live systems. Third, edge hardware continues to shrink power draw, enabling analytics in places where cabling is scarce and maintenance visits are infrequent.

Conclusion and next steps for practitioners:

– Baseline current reliability, energy, and maintenance performance; pick one measurable target.

– Instrument critical assets with minimal viable sensing; verify data quality before modeling.

– Automate stable routines with clear fail‑safes; keep human overrides simple and auditable.

– Introduce predictive maintenance on a few high‑value failure modes; measure alert precision and intervention outcomes.

– Institutionalize data stewardship and model lifecycle practices as you scale.

Leaders who treat AI as a disciplined craft—not a spectacle—gain infrastructure that is steadier under stress, clearer in its signals, and kinder to budgets. Start small, learn fast, and let results pull the next investment.