Exploring MES AI Solutions for Manufacturing Efficiency

Outline:

– Why MES matters now: context, value, and measurable outcomes

– From automation islands to orchestrated lines: bridging PLCs, robots, and sensors

– AI inside MES: predictive maintenance, quality intelligence, scheduling optimization

– Data foundations: models, standards, governance, and security

– Roadmap and ROI: phasing, KPIs, and change management for durable gains

Introduction:

Manufacturing has always been a choreography of materials, methods, and timing. What’s different today is the intelligence embedded in that dance—software that perceives, decides, and helps teams respond faster than a whiteboard ever could. Manufacturing Execution Systems (MES) sit at the center of this shift, translating plans into actions, tracking every step, and feeding back the evidence that leaders need to improve. Add automation and artificial intelligence, and MES becomes not just a digital clipboard, but a real-time conductor for throughput, quality, and cost.

The relevance is practical: many plants still juggle spreadsheets, handwritten notes, and siloed machine screens. That patchwork limits visibility, slows decisions, and hides the causes of scrap or downtime. When MES, automation, and AI align, teams gain traceability across the order, machine, and material journey; anomalies surface before they turn into defects; schedules adapt to constraints; and continuous improvement is grounded in data, not guesswork.

MES in Context: The Digital Spine of Modern Manufacturing

Think of a factory as a living system: raw materials in, finished goods out, and countless micro-decisions in between. MES form the digital spine that keeps this system upright and coordinated. They translate the “what” from planning into the “how” on the shop floor, guiding operations, collecting evidence, and reconciling outcomes with targets. In practical terms, MES manages work orders, enforces process steps, ensures the correct materials and tools are used, and records what actually happened at each station. This record is the foundation for traceability, audit readiness, and continuous improvement.

Why does this matter now? Volatility. Order mixes change daily, supply variability is common, and labor availability can fluctuate. Without real-time execution control, plants chase problems rather than prevent them. Industry benchmarks frequently report that disciplined MES adoption correlates with improvements such as 5–15% higher overall equipment effectiveness (OEE), 10–25% faster response to disruptions, and measurable reductions in scrap and rework. These ranges depend on baseline maturity, but the pattern is consistent: when execution becomes observable and enforceable, variation shrinks and predictability grows.
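Because OEE is the headline metric in these benchmarks, it helps to see how it is usually computed: availability × performance × quality. The sketch below is a minimal illustration. The `ShiftRecord` fields and the shift figures are hypothetical, and real MES implementations differ in how they classify planned versus unplanned stops.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ShiftRecord:
    planned_min: float      # planned production time in the shift
    downtime_min: float     # stops during planned time
    ideal_cycle_min: float  # ideal (nameplate) minutes per unit
    total_units: int        # all units produced
    good_units: int         # units that passed first time


def oee(s: ShiftRecord) -> dict[str, float]:
    """Return availability, performance, quality, and their product (OEE)."""
    run_min = s.planned_min - s.downtime_min
    availability = run_min / s.planned_min
    performance = (s.ideal_cycle_min * s.total_units) / run_min
    quality = s.good_units / s.total_units
    return {
        "availability": availability,
        "performance": performance,
        "quality": quality,
        "oee": availability * performance * quality,
    }


# Hypothetical shift: 420 planned minutes, 47 minutes of stops, 350 units, 340 good.
shift = ShiftRecord(planned_min=420, downtime_min=47, ideal_cycle_min=1.0,
                    total_units=350, good_units=340)
print({k: round(v, 3) for k, v in oee(shift).items()})
```

Because the run time and total count cancel, OEE reduces to good units × ideal cycle time ÷ planned time (here 340 ÷ 420), which is a handy cross-check on any dashboard.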

MES capabilities often include:

– Work order dispatching and electronic work instructions

– Recipe or route enforcement with version control

– Electronic batch records and genealogy/traceability

– Data collection from machines and manual inputs

– Nonconformance management, e-signatures, and audit trails

Consider a discrete assembly line that previously relied on paper travelers. Changeovers took longer than planned because operators fetched missing tools and parts, while quality checks were inconsistently documented. After introducing MES with point-of-use instructions and component verification, the line stabilized. Changeover time fell by a double-digit percentage, first-pass yield rose modestly but steadily, and supervisors could finally see bottlenecks in real time. No silver bullets were involved—just clear rules executed consistently, and data to prove it. From there, layering analytics and automation became much simpler because the process was visible, standardized, and measurable.
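Point-of-use component verification, as described in this example, amounts to a gate: a step is released only after every required part and tool has been scanned and checked against the route. The sketch below assumes a simple data model. `StepSpec`, `StepExecution`, and the part, revision, and tool IDs are hypothetical, not any particular MES product's API.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class StepSpec:
    step_id: str
    required_parts: dict[str, str]   # part number -> required revision
    required_tools: frozenset[str]   # tool IDs that must be scanned


@dataclass
class StepExecution:
    spec: StepSpec
    scanned_parts: dict[str, str] = field(default_factory=dict)
    scanned_tools: set[str] = field(default_factory=set)

    def scan_part(self, part_no: str, revision: str) -> None:
        expected = self.spec.required_parts.get(part_no)
        if expected is None:
            raise ValueError(f"{part_no} is not used at step {self.spec.step_id}")
        if revision != expected:
            raise ValueError(f"{part_no} rev {revision} scanned, rev {expected} required")
        self.scanned_parts[part_no] = revision

    def scan_tool(self, tool_id: str) -> None:
        if tool_id not in self.spec.required_tools:
            raise ValueError(f"tool {tool_id} is not specified for step {self.spec.step_id}")
        self.scanned_tools.add(tool_id)

    def missing(self) -> list[str]:
        parts = [p for p in self.spec.required_parts if p not in self.scanned_parts]
        tools = sorted(self.spec.required_tools - self.scanned_tools)
        return parts + tools

    def can_start(self) -> bool:
        # The step is released only when every part and tool has been verified.
        return not self.missing()


spec = StepSpec("S10", {"PN-100": "B", "PN-200": "A"}, frozenset({"TQ-5"}))
run = StepExecution(spec)
run.scan_part("PN-100", "B")
print(run.missing())  # ['PN-200', 'TQ-5']
```

Rejecting a wrong revision at the scan, rather than at final inspection, is what removes the "fetch the missing part" delays and the undocumented substitutions from the changeover.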

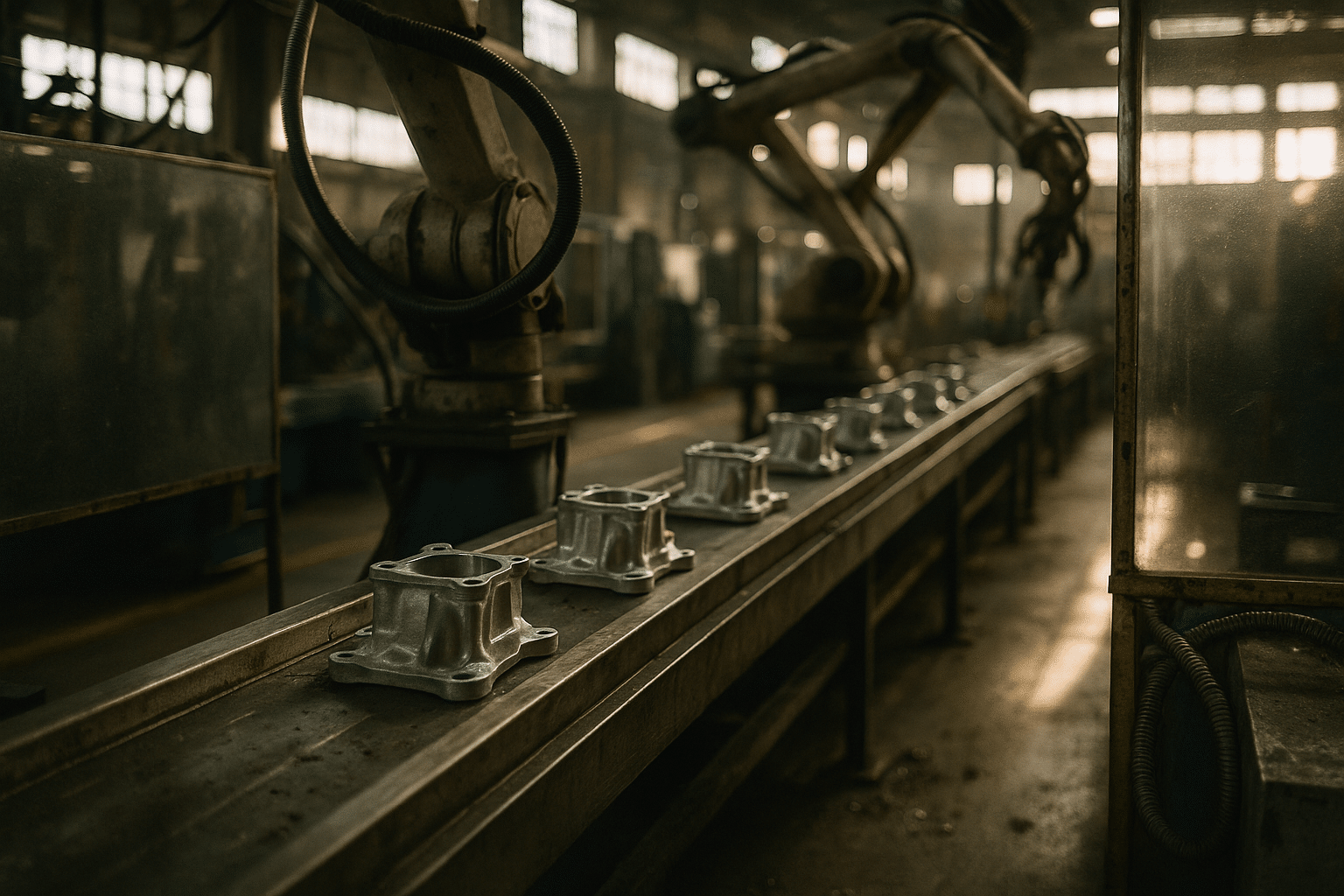

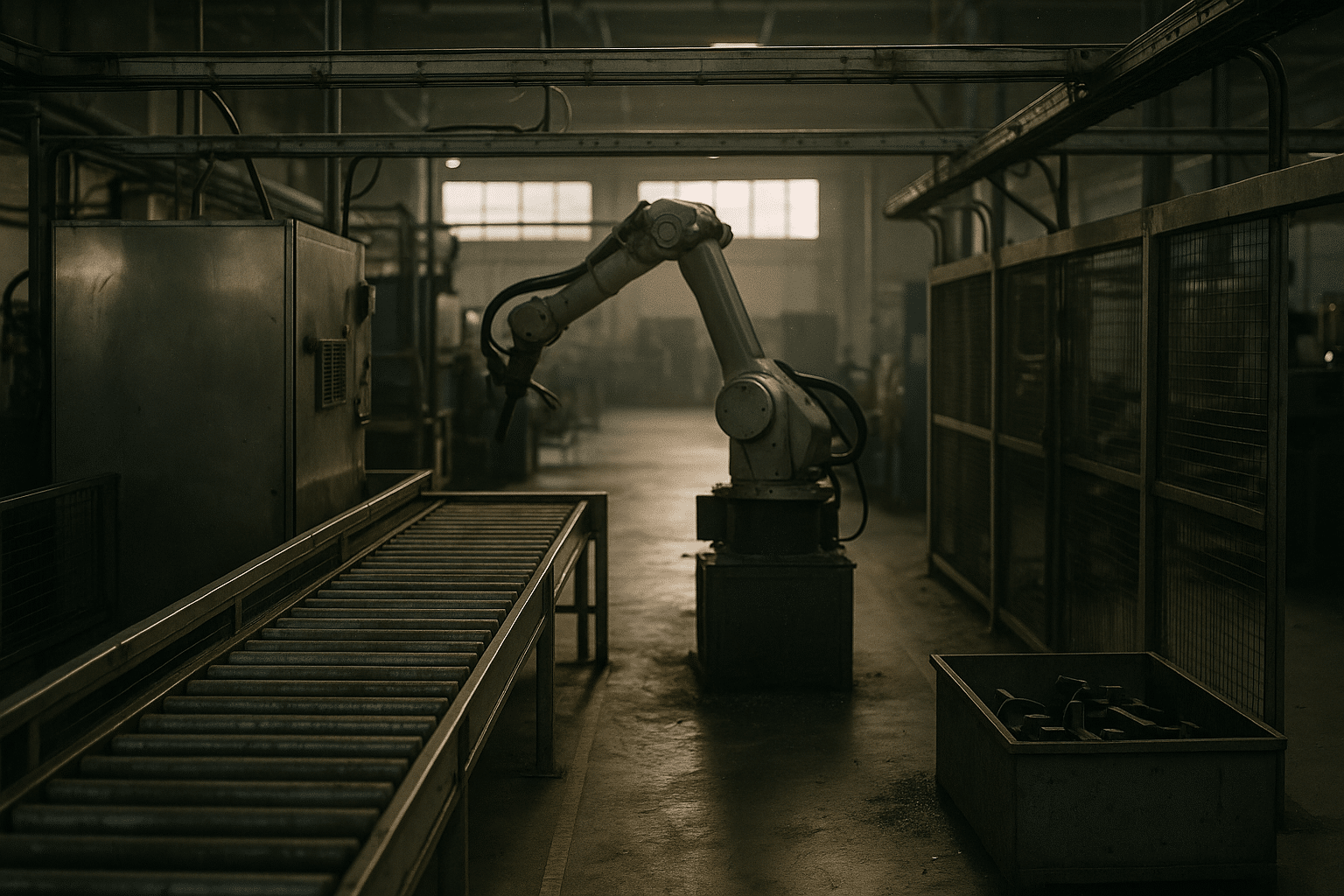

Automation Orchestration: From Islands of Equipment to a Cohesive Flow

Automation delivers speed and repeatability, but in many facilities it grows organically—one cell here, one robot there—until you have islands of capability with choppy handoffs. MES acts as the orchestrator that turns those islands into a chain. It aligns programmable logic controllers (PLCs), robots, conveyors, testers, and vision systems with the production plan, while keeping humans in the loop for exceptions. The goal is not to automate for its own sake, but to align automated actions with quality, safety, and takt time constraints.

Common automation assets you’ll see under MES coordination include:

– PLC-controlled stations handling motion, interlocks, and safety

– Vision systems verifying orientation, presence, or surface features

– Test stands capturing parametric data at the unit or batch level

– Material handling devices routing parts across cells

– Andon-like signaling for escalation and rapid response

When equipment is orchestrated, the system can prevent missteps before they occur. For example, if an upstream tester flags marginal results, MES can divert the unit for rework automatically, update genealogy records, and notify a quality technician. If a robotic cell reports a minor fault, MES can trigger a micro-stop protocol and present a short diagnostic checklist to the operator. Compared to manual coordination, this reduces the time equipment spends waiting for instructions and keeps WIP from backing up in the wrong place. Plants often observe smoother flow, shorter queues between cells, and a clearer separation of chronic versus sporadic downtime once orchestration is in place.
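The tester scenario above can be sketched as an event handler: classify the measurement, choose a route, write genealogy, and notify on exceptions. This is a minimal illustration under stated assumptions. The guard band that defines "marginal", the route names, and sending failures to quarantine are hypothetical policy choices, not a standard.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class Limits:
    low: float
    high: float
    guard_band: float  # distance inside each limit that counts as "marginal"


def classify(value: float, lim: Limits) -> str:
    if value < lim.low or value > lim.high:
        return "fail"
    if value < lim.low + lim.guard_band or value > lim.high - lim.guard_band:
        return "marginal"
    return "pass"


# Hypothetical routing policy: marginal units go to rework, failures to quarantine.
ROUTES = {"pass": "NEXT_STATION", "marginal": "REWORK", "fail": "QUARANTINE"}


@dataclass
class Orchestrator:
    genealogy: list[dict] = field(default_factory=list)
    notifications: list[str] = field(default_factory=list)

    def on_test_result(self, serial: str, station: str, value: float, lim: Limits) -> str:
        verdict = classify(value, lim)
        route = ROUTES[verdict]
        # Every result is written to genealogy, not only the exceptions.
        self.genealogy.append({
            "serial": serial, "station": station, "value": value,
            "verdict": verdict, "route": route,
            "ts": datetime.now(timezone.utc).isoformat(),
        })
        if verdict != "pass":
            self.notifications.append(
                f"Quality technician: {serial} {verdict} at {station} (value {value})")
        return route


mes = Orchestrator()
print(mes.on_test_result("SN-0042", "TEST-3", 5.45, Limits(4.5, 5.5, 0.1)))  # REWORK
```

In a real deployment the returned route would drive a diverter or the next work instruction, and the notification would go to an Andon or messaging channel rather than an in-memory list.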

Two integration patterns dominate. First, direct machine connectivity via standard industrial protocols, such as OPC UA, allows MES to read and write signals that gate work, collect measurements, and validate states. Second, historian-first designs centralize time-series data for analysis while MES consumes structured events, such as step completions and alarms. Each approach can be effective. Direct connectivity favors immediate control and enforcement; historian-centric architectures make broader analytics easier across many assets. The right choice depends on latency needs, network reliability, and the team’s data engineering capacity. Either way, the guiding principle is simple: fewer blind spots, faster feedback, and safer, more consistent execution.
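In a historian-first design, something has to turn sampled signals into the structured events MES consumes. A common reduction is edge detection on a "cycle done" bit: each False-to-True transition becomes one step-completion event. The sketch below assumes time-ordered samples and a False initial state; the tag name and event kind are hypothetical.

```python
from dataclasses import dataclass
from typing import Iterable, Iterator


@dataclass(frozen=True)
class Sample:
    ts: float    # seconds since epoch, as stored by the historian
    tag: str
    value: bool


@dataclass(frozen=True)
class Event:
    ts: float
    kind: str
    tag: str


def rising_edges(samples: Iterable[Sample], kind: str) -> Iterator[Event]:
    """Emit one event per False->True transition of each tag.

    Assumes samples are time-ordered and every tag starts in the False state.
    """
    last: dict[str, bool] = {}
    for s in samples:
        if s.value and not last.get(s.tag, False):
            yield Event(s.ts, kind, s.tag)
        last[s.tag] = s.value


history = [Sample(t, "cell4.cycle_done", v)
           for t, v in [(0.0, False), (1.0, True), (2.0, True), (3.0, False), (4.0, True)]]
for ev in rising_edges(history, "step_completed"):
    print(ev)
```

The same function works on a live stream in the direct-connectivity pattern; the difference between the two designs is mostly where this reduction runs and how quickly its output reaches MES.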

AI Inside MES: Predict, Optimize, and Assure Quality

Artificial intelligence amplifies MES by turning raw shop-floor signals into timely decisions. While traditional statistical process control and rules remain useful, AI can spot non-obvious patterns and adapt to changing conditions without manually tweaking thresholds every week. Three families of use cases stand out. First, predictive maintenance models estimate failure risk from vibration, temperature, and cycle profiles, reducing surprise breakdowns. Second, computer vision and sensor fusion identify defects earlier, sometimes before they appear to the naked eye. Third, scheduling and dispatch intelligence weighs constraints—skills, setup times, material availability—to recommend sequences that reduce changeovers and idle time.

Results vary, yet many organizations report sustainable gains when models graduate from pilots to daily routines. Predictive maintenance can cut unplanned downtime by double-digit percentages, especially on cyclical equipment where failure modes are repetitive. Quality models often reduce false scrap (good parts rejected as bad) by flagging borderline conditions for targeted checks rather than blanket rework. AI-enhanced schedulers, even those that simply combine heuristics with machine learning forecasts, tend to raise schedule adherence and throughput in high-mix environments. The common thread is decision support: the model suggests; humans confirm; MES executes and records the outcome for continued learning.
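As an illustration of the "simple heuristics" end of scheduling, the sketch below uses a nearest-neighbor rule to sequence jobs: always run next the job that is cheapest to change over to. It is not guaranteed to be optimal, and it ignores due dates, skills, and material availability, all of which a real scheduler must weigh. The setup matrix, product families, and job IDs are hypothetical.

```python
def setup_minutes(a: str, b: str, matrix: dict[tuple[str, str], float]) -> float:
    # Staying within a product family is assumed to need no changeover.
    return 0.0 if a == b else matrix[(a, b)]


def greedy_sequence(current_family: str,
                    jobs: list[tuple[str, str]],
                    matrix: dict[tuple[str, str], float]) -> tuple[list[str], float]:
    """Nearest-neighbor heuristic: always run next the job cheapest to switch to.

    jobs are (job_id, family) pairs; ties keep the original queue order.
    """
    remaining = list(jobs)
    order: list[str] = []
    total = 0.0
    while remaining:
        job = min(remaining, key=lambda j: setup_minutes(current_family, j[1], matrix))
        total += setup_minutes(current_family, job[1], matrix)
        current_family = job[1]
        order.append(job[0])
        remaining.remove(job)
    return order, total


SETUP = {("A", "B"): 30, ("B", "A"): 30, ("A", "C"): 45,
         ("C", "A"): 45, ("B", "C"): 10, ("C", "B"): 10}
queue = [("J1", "C"), ("J2", "A"), ("J3", "B"), ("J4", "A")]
print(greedy_sequence("A", queue, SETUP))  # (['J2', 'J4', 'J3', 'J1'], 40.0)
```

Running the same queue first-in-first-out from family A would cost 45 + 45 + 30 + 30 = 150 setup minutes. In keeping with the decision-support pattern, the output is a recommendation for a planner to confirm, not an automatic dispatch.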

Comparisons help clarify where AI fits:

– Rules and SPC: simple, transparent, low maintenance; limited when signal patterns drift

– Supervised ML: strong detection accuracy with labeled data; requires ongoing monitoring

– Unsupervised anomaly detection: faster to start with; may need tuning to reduce false alarms

– Optimization and reinforcement methods: powerful under complex constraints; demand reliable simulations and guardrails
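To make the "Rules and SPC" baseline concrete, here is a minimal individuals (I-MR) control chart: limits at the mean ± 3 sigma, with sigma estimated from the average moving range divided by the d2 constant 1.128. The baseline and new readings are hypothetical.

```python
from statistics import mean


def individuals_limits(baseline: list[float]) -> tuple[float, float, float]:
    """(LCL, center, UCL) for an individuals chart, sigma estimated from moving ranges."""
    center = mean(baseline)
    moving_ranges = [abs(b - a) for a, b in zip(baseline, baseline[1:])]
    sigma_hat = mean(moving_ranges) / 1.128  # d2 constant for moving ranges of size 2
    return center - 3 * sigma_hat, center, center + 3 * sigma_hat


def out_of_limits(values: list[float], limits: tuple[float, float, float]) -> list[int]:
    lcl, _, ucl = limits
    return [i for i, v in enumerate(values) if v < lcl or v > ucl]


baseline = [10.0, 10.2, 9.9, 10.1, 10.0, 9.8, 10.2, 10.0]
limits = individuals_limits(baseline)
print(out_of_limits([10.1, 10.4, 10.7, 9.3], limits))  # [2, 3]
```

The weakness named in the list is visible here: a slow drift that creeps toward a limit without crossing it raises no flag. Run rules or EWMA charts address part of that, and anomaly detection or supervised models are the next step when patterns are multivariate.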

Where should the models live: at the edge or in the cloud? Edge deployment near machines minimizes latency for interventions such as reject gates or robotic adjustments, and it keeps sensitive data on-site. Cloud or data center environments simplify training on longer histories and fleetwide benchmarking. A hybrid pattern is common: train centrally, deploy at the edge, and sync model versions through MES change control.

Finally, treat AI like any production asset. Use model versioning, drift metrics, and clear rollback procedures. Establish KPIs such as mean time between failures, first-pass yield, false-reject rate, and on-time completion, then attribute changes to model-enabled decisions. AI shines when it is accountable, measured, and integrated, not when it’s a science experiment off to the side.
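One widely used drift metric is the Population Stability Index (PSI), which compares the distribution of a feature at training time with what the model sees in production. The sketch below is a minimal version; the bin edges and data are hypothetical, and the often-quoted thresholds (around 0.1 for "watch" and 0.25 for "investigate") are rules of thumb rather than a standard.

```python
import bisect
import math


def psi(expected: list[float], actual: list[float], edges: list[float],
        eps: float = 1e-6) -> float:
    """Population Stability Index of `actual` against `expected` over shared bins.

    edges are sorted interior bin boundaries; eps avoids log(0) for empty bins.
    """
    def proportions(xs: list[float]) -> list[float]:
        counts = [0] * (len(edges) + 1)
        for x in xs:
            counts[bisect.bisect_right(edges, x)] += 1
        return [max(c / len(xs), eps) for c in counts]

    p, q = proportions(expected), proportions(actual)
    return sum((qi - pi) * math.log(qi / pi) for pi, qi in zip(p, q))


training = [1, 2, 3, 4, 5, 6, 7, 8]
live = [5, 6, 7, 8, 7, 8, 7, 8]
edges = [2.5, 4.5, 6.5]
print(round(psi(training, training, edges), 3), psi(training, live, edges) > 0.25)
```

A per-feature PSI tracked alongside the model version gives the quarterly model audit something objective to review, and a sustained breach is a reasonable trigger for the rollback procedure.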

Data Foundations and Interoperability: Standards, Quality, and Governance

AI and automation only pay off if the data is trustworthy and organized. A strong data foundation turns the factory’s many dialects into a shared language. Reference models such as ISA-95 clarify where enterprise planning ends and shop-floor execution begins, while ISA-88 provides structure for recipes and batch logic. For machine connectivity, widely adopted protocols such as OPC UA provide consistent, well-typed metadata and secure sessions. Lightweight publish/subscribe messaging, such as MQTT, helps stream states and measurements from many devices without overwhelming the network.

Good architecture balances three needs: real-time enforcement, historical analysis, and cross-site comparability. Real-time paths carry signals used by MES to validate steps, interlock operations, and log critical parameters. Historical stores hold granular time-series data for reliability and quality studies. Harmonized master data—part numbers, routes, bill-of-process, test limits—ensures analytics compare apples to apples across lines and plants. In practice, a semantic layer maps equipment tags to business concepts so that “station_12_temp” becomes “solder_heater_temp,” with units and limits defined once and reused everywhere.
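A semantic layer can be as simple as two tables: business definitions declared once, and per-site maps from raw tag names onto those definitions. The sketch below assumes that shape. The second site, its tag `L3_OVEN_T1`, and the 240–260 °C limits are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Definition:
    unit: str
    low: float
    high: float


# Business concepts are defined once...
DEFINITIONS = {
    "solder_heater_temp": Definition(unit="degC", low=240.0, high=260.0),
}

# ...and each site maps its own raw tag names onto them.
TAG_MAP = {
    ("plant_a", "station_12_temp"): "solder_heater_temp",
    ("plant_b", "L3_OVEN_T1"): "solder_heater_temp",
}


def contextualize(site: str, raw_tag: str, value: float) -> dict:
    try:
        name = TAG_MAP[(site, raw_tag)]
    except KeyError:
        raise KeyError(f"unmapped tag {raw_tag!r} at {site!r}") from None
    d = DEFINITIONS[name]
    return {"name": name, "value": value, "unit": d.unit,
            "in_limits": d.low <= value <= d.high}


print(contextualize("plant_a", "station_12_temp", 251.0))
print(contextualize("plant_b", "L3_OVEN_T1", 263.5))
```

Because both plants resolve to the same definition, a cross-site comparison of solder heater temperature uses one unit and one set of limits, and changing a limit is a single edit.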

Data quality deserves day-to-day attention:

– Completeness: are all required fields present at each step?

– Accuracy: do sensors drift, and are calibrations recorded?

– Timeliness: are timestamps synchronized and sequences reliable?

– Lineage: can you trace every metric to its origin and transformation?
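The four questions above can be turned into automated checks that run on every batch of records. The sketch below is a minimal version under stated assumptions: the record fields, the two-second clock-skew tolerance, and the calibration lookup are hypothetical, and lineage is reduced to "every value names its source", which is far less than full transformation lineage.

```python
from datetime import datetime, timedelta

REQUIRED_FIELDS = ("serial", "step", "value", "device_ts", "ingest_ts", "source")


def check_records(records: list[dict],
                  calibration_due: dict[str, datetime],
                  max_skew: timedelta = timedelta(seconds=2)) -> list[tuple[int, str, str]]:
    """Return (record index, dimension, message) for each data-quality issue found."""
    issues = []
    last_ts: dict[str, datetime] = {}
    for i, r in enumerate(records):
        # Completeness, plus minimal lineage: every value must name its source.
        missing = [f for f in REQUIRED_FIELDS if r.get(f) is None]
        if missing:
            issues.append((i, "completeness", "missing " + ", ".join(missing)))
            continue
        # Accuracy: the measuring device must have a current calibration record.
        due = calibration_due.get(r["source"])
        if due is None or r["device_ts"] > due:
            issues.append((i, "accuracy", f"{r['source']} calibration missing or expired"))
        # Timeliness: device and ingest clocks agree, and events arrive in order per unit.
        if abs(r["ingest_ts"] - r["device_ts"]) > max_skew:
            issues.append((i, "timeliness", "clock skew exceeds tolerance"))
        prev = last_ts.get(r["serial"])
        if prev is not None and r["device_ts"] < prev:
            issues.append((i, "timeliness", "out-of-order event for this serial"))
        last_ts[r["serial"]] = r["device_ts"]
    return issues


t0 = datetime(2024, 1, 1, 8, 0, 0)
s = timedelta(seconds=1)
records = [
    {"serial": "U1", "step": "S10", "value": 1.02, "device_ts": t0,
     "ingest_ts": t0 + s, "source": "gauge_7"},
    {"serial": "U2", "step": "S10", "value": None, "device_ts": t0,
     "ingest_ts": t0 + s, "source": "gauge_7"},
    {"serial": "U1", "step": "S20", "value": 0.98, "device_ts": t0 - 10 * s,
     "ingest_ts": t0 - 9 * s, "source": "gauge_7"},
]
for issue in check_records(records, {"gauge_7": datetime(2024, 6, 30)}):
    print(issue)
```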

Governance ties it together. Role-based access protects sensitive product data, while audit trails record who changed what and when—a must for regulated industries. Cybersecurity frameworks tailored for industrial control systems help segment networks, harden interfaces, and monitor anomalies without disrupting production. For AI, maintain a feature catalog with documented definitions and validation steps, and keep training datasets under change control. When engineering, quality, and IT align on standards, onboarding a new line or supplier stops being a months-long custom project and becomes routine. That repeatability is the quiet multiplier behind faster scale-up, cleaner compliance, and a pipeline of AI models that can be deployed across sites with minimal rework.
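Role-based access and audit trails work together: the permission check decides whether a change is allowed, and the trail records who attempted what, when, and why. The sketch below is a minimal illustration. The roles, permission names, and recipe parameter are hypothetical, and an in-memory list is not tamper-proof; a production system would persist the trail in protected storage.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical role model; each plant defines roles and permissions in its own governance.
ROLE_PERMISSIONS = {
    "operator": {"view_recipe"},
    "process_engineer": {"view_recipe", "edit_recipe"},
    "quality_manager": {"view_recipe", "approve_recipe"},
}


@dataclass
class RecipeStore:
    params: dict[str, float]
    audit: list[dict] = field(default_factory=list)  # append-only: entries are never edited

    def _log(self, **entry) -> None:
        entry["ts"] = datetime.now(timezone.utc).isoformat()
        self.audit.append(entry)

    def set_param(self, user: str, role: str, key: str, value: float, reason: str) -> None:
        if "edit_recipe" not in ROLE_PERMISSIONS.get(role, set()):
            # Denied attempts are recorded too, so the trail shows who tried what.
            self._log(user=user, role=role, action="denied", key=key, new=value)
            raise PermissionError(f"{user} ({role}) may not edit recipes")
        old = self.params.get(key)
        self.params[key] = value
        self._log(user=user, role=role, action="edit", key=key,
                  old=old, new=value, reason=reason)


store = RecipeStore({"reflow_peak_c": 245.0})
store.set_param("engineer_1", "process_engineer", "reflow_peak_c", 248.0,
                "trial per change request")
try:
    store.set_param("operator_7", "operator", "reflow_peak_c", 255.0, "")
except PermissionError as err:
    print(err)
print([e["action"] for e in store.audit])
```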

Roadmap, ROI, and Change Management: Scaling What Works

Transformations stall when they chase technology without anchoring it to outcomes. A practical roadmap starts with a value case, a narrow scope, and the discipline to measure. Choose a pilot line where pain is concrete—excess changeover time, low first-pass yield, or chronic micro-stops. Define baseline KPIs, implement a focused MES capability (for example, electronic work instructions and data collection at critical stations), integrate with a handful of machines, and add one AI use case that reduces a visible loss. The pilot’s goal is not to impress in a demo, but to survive real shifts and prove gains over several weeks.

From there, scale along three axes:

– Function: extend to nonconformance management, genealogy, recipe control

– Footprint: replicate across similar lines, then adapt for variants

– Intelligence: add predictive maintenance, quality scoring, and smarter scheduling

ROI modeling should be transparent. Quantify benefits in reduced scrap, recovered uptime, shorter cycle time, lower premium freight, and deferred capital through higher OEE. On the cost side, include software licenses or subscriptions, integration effort, sensors and edge hardware, change management, training time, and ongoing support. Many programs target payback in 12–24 months for initial waves, with cumulative improvements compounding as capabilities expand. Use simple cash-flow calculators, attribute improvements conservatively, and include sensitivity analyses to reflect uncertainty.
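A simple cash-flow calculator with a sensitivity sweep fits in a few lines. The sketch below makes simplifying assumptions: it ignores discounting, and it treats the monthly benefit as flat from month one with no ramp-up. All program figures are hypothetical. The sweep asks what happens if only part of the estimated benefit materializes, which is one way to attribute improvements conservatively.

```python
from typing import Optional


def payback_month(upfront: float, monthly_benefit: float, monthly_cost: float,
                  horizon_months: int = 60) -> Optional[int]:
    """First month in which cumulative (undiscounted) cash flow turns non-negative."""
    cumulative = -upfront
    for month in range(1, horizon_months + 1):
        cumulative += monthly_benefit - monthly_cost
        if cumulative >= 0:
            return month
    return None  # no payback within the horizon


# Hypothetical program figures, for illustration only.
UPFRONT = 400_000      # licenses, integration, sensors, training
FULL_BENEFIT = 40_000  # monthly scrap, uptime, and freight savings if fully realized
SUPPORT = 5_000        # ongoing monthly support

# Sensitivity: what if only part of the estimated benefit materializes?
for realized in (1.0, 0.75, 0.5):
    print(f"{realized:.0%} of benefit -> payback month",
          payback_month(UPFRONT, FULL_BENEFIT * realized, SUPPORT))
```

With these figures, payback moves from month 12 at full benefit to month 16 at 75% and month 27 at 50%, which shows how quickly a 12–24 month target depends on how conservatively benefits are counted.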

People make it stick. Engage operators early, gather feedback on usability, and avoid burying frontline staff in pop-ups and data entry. Short, scenario-based training beats long lectures. Standardize escalation paths so that when AI flags an anomaly, teams know who decides and how to proceed. Create a governance cadence—monthly KPI reviews, quarterly model audits, and annual architecture checks—to keep the system healthy. Finally, communicate wins with context: not just that OEE rose, but why, and how that freed capacity for new products or reduced overtime. When outcomes are visible and repeatable, momentum builds. The result is a factory that behaves less like a collection of isolated machines and more like a resilient, learning system—steady under pressure, quick to adapt, and clear about what “good” looks like every hour of the day.