Exploring the Impact of Generative AI on Industries

Outline: How Machine Learning, Neural Networks, and AI Models Power Generative Change

Generative AI has shifted from lab demos to boardroom priorities, but the machinery beneath the magic is often overlooked. To navigate investment, build reliable systems, and set realistic expectations, it helps to separate the layers: machine learning provides the learning paradigm, neural networks are the computational canvas, and AI models package capabilities for real tasks. This outline sets the roadmap you will follow through the article, and it anchors each concept to decisions practitioners make every day, from data strategy to deployment risks. Along the way, we will link technical choices to business outcomes and highlight trade-offs that determine whether a pilot quietly fades or scales into production value.

What this article covers, and how sections connect:

– Section 1 (you are here): An orientation that frames the topic and lists what to expect.

– Section 2: Machine learning foundations, from supervision types to evaluation, with an eye on how generative systems learn and generalize.

– Section 3: Neural network architectures and training dynamics, comparing attention-based models, recurrent designs, and diffusion approaches.

– Section 4: AI models in practice across industries, including use cases, measurable value, risks, and governance patterns.

– Section 5: A conclusion with an actionable roadmap, focusing on practical steps for leaders, product teams, and researchers.

Why this structure matters: generative systems rely heavily on self-supervised learning and representation quality, so poor data pipelines can cripple even advanced models. Architecture choices influence latency, memory, and controllability, which in turn shape user experience and operating costs. Deployment adds its own constraints, including privacy, compliance, and reliability under drift. By making these dependencies explicit, you can match methods to goals and avoid common traps such as training on narrow distributions, overfitting to benchmarks, or underinvesting in evaluation. Think of the journey ahead as moving from map to compass to road: fundamentals draw the map, architectures provide the compass, and models-in-production are the road where rubber meets reality. With that, let’s step into the foundations that make generative systems more than clever demos.

Machine Learning Foundations Powering Generative Systems

Machine learning is the study of algorithms that improve through data. In practice, three families dominate: supervised learning (learning from labeled examples), unsupervised and self-supervised learning (discovering structure or predicting parts of data from other parts), and reinforcement learning (optimizing decision policies from feedback). Generative systems lean heavily on self-supervised strategies, because predicting missing tokens in text, pixels in images, or frames in video scales well without expensive labeling. The goal is representation learning: compressing high-dimensional inputs into useful abstractions that transfer across tasks.
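To make the self-supervised idea concrete, here is a minimal sketch of how unlabeled text can be turned into training pairs by hiding some tokens and asking a model to predict them. The names `MASK` and `make_masked_example` and the masking probability are illustrative assumptions, not a reference to any particular library.

```python
import random

MASK = "[MASK]"  # hypothetical placeholder symbol for a hidden token

def make_masked_example(tokens, mask_prob, rng):
    """Build (inputs, targets) from unlabeled tokens.

    Each token is hidden with probability mask_prob. The target is the
    original token at hidden positions and None elsewhere, so the "labels"
    come from the data itself rather than from human annotation.
    """
    inputs, targets = [], []
    for tok in tokens:
        if rng.random() < mask_prob:
            inputs.append(MASK)
            targets.append(tok)
        else:
            inputs.append(tok)
            targets.append(None)
    return inputs, targets

sentence = "generative models learn structure from raw data".split()
inputs, targets = make_masked_example(sentence, mask_prob=0.3, rng=random.Random(0))
print(inputs)
print(targets)
```

No labeling step appears anywhere in the sketch, which is why this style of objective scales to very large corpora.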

Data quality is the keystone. Curating diverse, deduplicated, and well-governed datasets reduces overfitting and improves generalization. Sampling bias, long-tail phenomena, and domain drift can degrade model behavior when products meet real users. Practical pipelines often include filtering, deduplication, stratified sampling, and synthesis of rare cases. For generative applications, guardrail datasets (collections of prompts and edge cases) help constrain outputs and reduce unwanted content. Evaluation moves beyond accuracy into metrics that reflect user value: perplexity for language modeling, Fréchet Inception Distance (FID) and related metrics for images, coverage and diversity for outputs, and human-in-the-loop ratings for usefulness and safety.
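Of the metrics above, perplexity is the easiest to pin down: it is the exponential of the average negative log-likelihood per token. The sketch below assumes you already have per-token natural-log probabilities from a model; the probability values are made up for illustration.

```python
import math

def perplexity(token_log_probs):
    """Perplexity = exp(mean negative log-likelihood per token), natural log."""
    if not token_log_probs:
        raise ValueError("need at least one token log-probability")
    mean_nll = -sum(token_log_probs) / len(token_log_probs)
    return math.exp(mean_nll)

# Hypothetical probabilities a model assigned to each token of a held-out text.
uniform_guess = [math.log(0.25)] * 4  # as uncertain as a 4-way coin flip
confident = [math.log(p) for p in (0.9, 0.8, 0.95, 0.85)]

print(perplexity(uniform_guess))
print(perplexity(confident))
```

A model that assigns probability 0.25 to every token has a perplexity of 4, which is why the metric is often read as an effective number of equally likely choices. Lower is better, but it says nothing on its own about factuality or usefulness.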

Why this matters to industry teams:

– Reliability depends on distribution coverage. A model that shines on common patterns may falter on niche but critical cases.

– Cost scales with data and compute. Efficient sampling, curriculum schedules, and smaller distilled models can preserve quality while reducing spend.

– Compliance starts with data lineage. Clear records of sources, consent, and transformations make audits smoother and reduce legal risk.

– Iteration speed wins. Automated evaluation suites and well-instrumented feedback loops shorten cycles from idea to improvement.

It is tempting to equate model size with capability, but returns vary by domain and data. Many teams find that targeted fine-tuning on domain corpora outperforms raw scaling. Similarly, retrieval-augmented generation injects up-to-date or proprietary context at inference time, improving factuality without retraining. The lesson is simple: match the learning setup to the problem’s structure. For forecasting demand, supervised regression with hierarchical features may beat a large generative system. For creative design or multi-turn assistance, self-supervised generative models can provide flexible, controllable outputs when paired with careful evaluation and guardrails. Foundations are not glamorous, yet they determine whether later layers thrive or struggle.
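As a rough illustration of retrieval-augmented generation, the sketch below scores a handful of documents against a query and places the best match into a prompt. It uses bag-of-words cosine similarity as a stand-in for the learned embeddings a production system would more likely use. The documents, function names, and prompt wording are all hypothetical, and the final call to a generative model is left out.

```python
import math
from collections import Counter

def tokenize(text):
    """Lowercase words with surrounding punctuation stripped."""
    words = (w.strip(".,;:!?").lower() for w in text.split())
    return [w for w in words if w]

def cosine(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(count * b[term] for term, count in a.items() if term in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def retrieve(query, documents, k=1):
    """Return the k documents most similar to the query."""
    q = Counter(tokenize(query))
    ranked = sorted(documents, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True)
    return ranked[:k]

def build_prompt(query, documents, k=1):
    """Inject retrieved context into the prompt at inference time."""
    context = "\n".join(f"- {d}" for d in retrieve(query, documents, k))
    return f"Answer using only the context below.\nContext:\n{context}\nQuestion: {query}"

# Hypothetical proprietary snippets the base model was never trained on.
DOCS = [
    "Refunds are issued within 14 days of a return request.",
    "Standard shipping takes 3 to 5 business days.",
    "Support is available by chat on weekdays.",
]

print(build_prompt("How long does shipping take?", DOCS))
```

Updating the answer here means editing `DOCS`, not retraining anything, which is the practical appeal of the approach.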

Neural Networks: Architectures, Training Dynamics, and Generative Methods

Neural networks approximate functions by stacking linear transformations with nonlinear activations; their parameters are trained by gradient descent, with gradients computed by backpropagation. Different architectures encode different inductive biases. Convolutional networks exploit locality and weight sharing, which makes them translation-equivariant and efficient for images. Recurrent designs process sequences step by step, modeling temporal dependencies. Attention-based architectures learn to weight relevant tokens anywhere in a sequence, enabling parallel training and effective modeling of long-range dependencies. Each choice carries trade-offs in compute, memory, latency, and data efficiency.
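To show what "focusing on relevant tokens" means mechanically, here is a minimal sketch of scaled dot-product attention in plain Python. It handles a single head with no masking or learned projections, so it illustrates the core computation rather than a full transformer layer. The function names are chosen for this example.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention over lists of vectors.

    Each query is compared with every key; the softmaxed scores are then
    used to take a weighted average of the value vectors.
    """
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        width = len(values[0])
        outputs.append([sum(w * v[j] for w, v in zip(weights, values)) for j in range(width)])
    return outputs

# A query aligned with the first key pulls the output toward the first value.
print(attention([[10.0, 0.0]], [[10.0, 0.0], [0.0, 10.0]], [[1.0], [0.0]]))
```

Because every query is scored against every key independently, the loop over queries can run in parallel. The same property makes cost grow with the square of sequence length.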

Generative modeling uses several families. Autoregressive models produce outputs token by token, each conditioned on the tokens before it, which yields tractable likelihoods and direct control over sampling. Variational autoencoders learn latent spaces that enable interpolation and structured edits. Diffusion models learn to reverse a gradual noising process, synthesizing detailed samples with good mode coverage. For text, attention-based autoregressive models are popular due to their flexible conditioning and scalable training. For images and 3D assets, diffusion methods excel at high-fidelity synthesis and controllable guidance through prompts or conditions.
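The autoregressive loop is simple enough to sketch end to end. The example below samples one token at a time from a toy lookup table of next-token logits, with a temperature parameter controlling how sharp the distribution is. The `LOGITS` table is a hypothetical stand-in for a trained network, which would condition on the full history and not only the previous token.

```python
import math
import random

# Hypothetical next-token logits, keyed by the previous token only.
LOGITS = {
    "<s>": {"the": 2.0, "a": 1.0},
    "the": {"cat": 1.5, "dog": 1.5},
    "a": {"cat": 1.0, "dog": 2.0},
    "cat": {"</s>": 3.0},
    "dog": {"</s>": 3.0},
}

def next_token_probs(prev, temperature=1.0):
    """Softmax over temperature-scaled logits for the next token."""
    scaled = {tok: logit / temperature for tok, logit in LOGITS[prev].items()}
    peak = max(scaled.values())
    exps = {tok: math.exp(s - peak) for tok, s in scaled.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}

def generate(rng, temperature=1.0, max_len=10):
    """Sample tokens one at a time until the end marker or max_len."""
    tokens = ["<s>"]
    while tokens[-1] != "</s>" and len(tokens) < max_len:
        probs = next_token_probs(tokens[-1], temperature)
        choices, weights = zip(*probs.items())
        tokens.append(rng.choices(choices, weights=weights, k=1)[0])
    return tokens[1:]

print(generate(random.Random(0)))
print(next_token_probs("<s>", temperature=0.1))
```

Lowering the temperature concentrates probability on the highest-scoring token, trading diversity for predictability. Raising it does the opposite.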

Training dynamics matter as much as architecture. Learning rate schedules, normalization choices, initialization, and batch sizes all influence stability and convergence. Overfitting shows up as fluent yet brittle behavior; regularization, data augmentation, and early stopping help. Tokenization can bottleneck both performance and controllability for text-based systems, while resolution and augmentation policies shape outcomes in vision. Safety, too, is shaped during training and not only at deployment: a model’s capacity to refuse unsafe requests or respect constraints benefits from both training data curation and auxiliary objectives that shape behavior.
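One common learning rate schedule, sketched here as an example, is linear warmup followed by cosine decay. The peak rate, warmup length, and total step count below are placeholder values chosen for illustration, not recommendations.

```python
import math

def lr_at_step(step, peak_lr=3e-4, warmup_steps=100, total_steps=1000, min_lr=0.0):
    """Learning rate at a 0-indexed training step.

    Rises linearly to peak_lr over warmup_steps, then follows half a cosine
    wave down to min_lr at total_steps.
    """
    if step < warmup_steps:
        return peak_lr * (step + 1) / warmup_steps
    progress = min(1.0, (step - warmup_steps) / max(1, total_steps - warmup_steps))
    cosine = 0.5 * (1.0 + math.cos(math.pi * progress))
    return min_lr + (peak_lr - min_lr) * cosine

for step in (0, 99, 550, 1000):
    print(step, lr_at_step(step))
```

The warmup keeps early updates small while optimizer statistics are still noisy, and the gradual decay lets training settle instead of oscillating near the end.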

Key trade-offs leaders should weigh:

– Speed vs. quality: Larger context windows and more sampling or guidance steps can improve output quality, but they raise latency and cost.

– Memory vs. scale: Wider layers and deeper stacks increase capacity yet strain hardware budgets; sparsity and quantization can help.

– Control vs. creativity: Strong constraints and retrieval improve factuality but may reduce novelty; hybrid strategies balance both.

– Generality vs. specialization: A single broad model simplifies tooling, while small domain models can outperform on narrow tasks.
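The memory trade-off in the list above can be made tangible with a minimal sketch of symmetric 8-bit quantization. It maps floating-point weights to integers in the range -127 to 127 plus a single scale factor. Real toolchains typically add per-channel scales and calibration data, and the Python lists here only mimic the arithmetic, not the packed storage.

```python
def quantize_int8(weights):
    """Map floats to integers in [-127, 127] with one shared scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale

def dequantize(quantized, scale):
    """Recover approximate floats from the integer codes."""
    return [q * scale for q in quantized]

weights = [0.5, -1.27, 0.0, 1.0]  # hypothetical layer weights
codes, scale = quantize_int8(weights)
restored = dequantize(codes, scale)
print(codes, scale)
print(max(abs(w - r) for w, r in zip(weights, restored)))
```

Each weight shrinks from 32 bits to 8 at the cost of a rounding error of at most half the scale, so the question for a given workload is whether that error is tolerable.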

Finally, evaluation must reflect end goals. Perplexity or loss reductions are useful signals but do not guarantee factuality, safety, or user satisfaction. Human preference data, rubric-based scoring, and scenario tests (e.g., adversarial prompts, long-context reasoning, or rare failure modes) round out measurement. When teams make these dynamics explicit, they can pick architectures that fit workloads rather than chasing trends, guiding generative systems from clever outputs toward dependable tools.

AI Models Across Industries: Use Cases, Value, Risks, and Governance

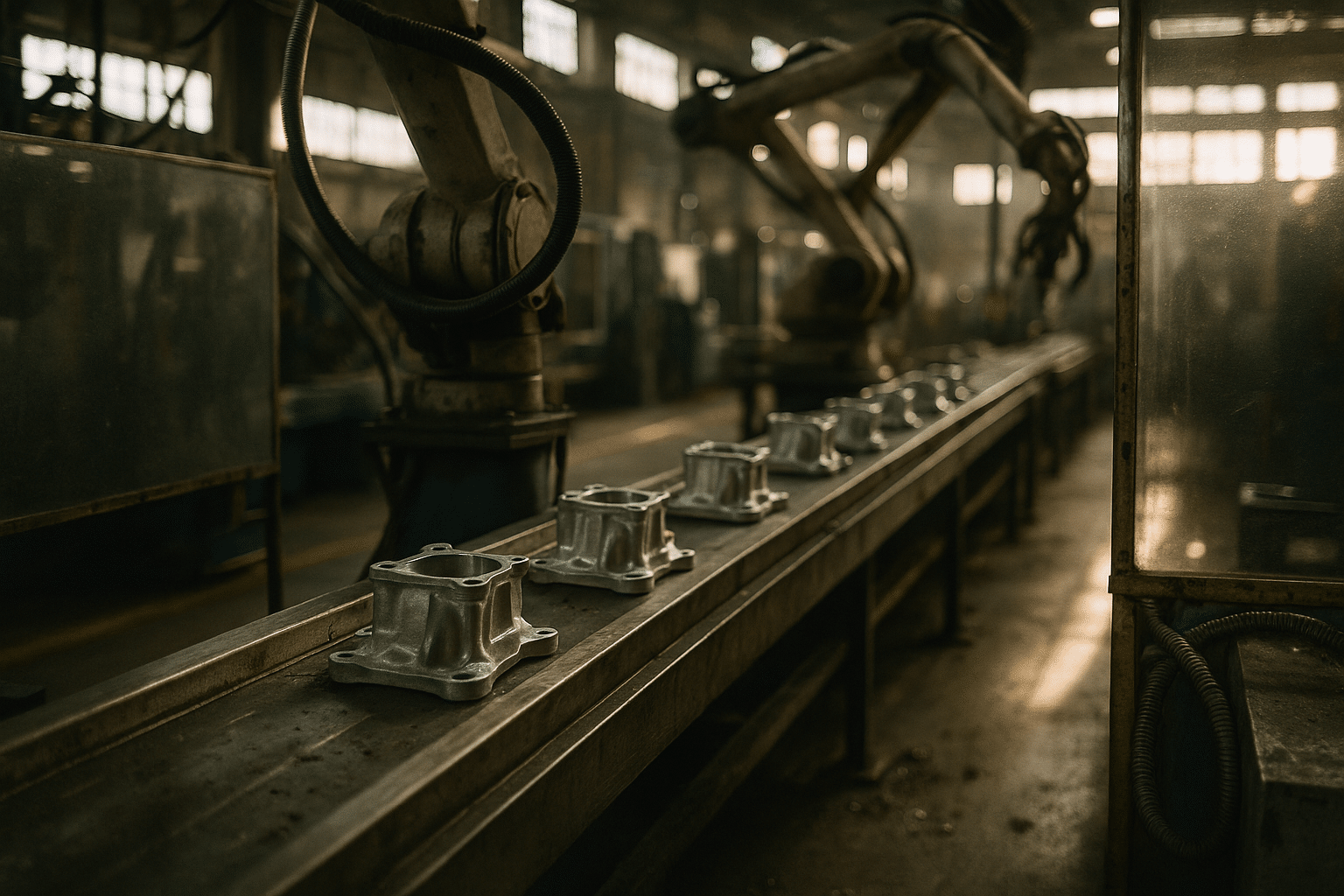

Generative AI already touches many workflows, often as a copilot that drafts, translates, summarizes, designs, or proposes alternatives. In product development, generative design explores shapes that meet constraints like strength-to-weight ratios or thermal limits, then suggests manufacturable options. In customer operations, assistants triage requests, retrieve policy snippets, and propose responses tailored to context. In marketing, content variants align to tone or channel while preserving brand guidelines. In finance and services, models analyze narratives in reports to surface signals alongside quantitative metrics. Each example pairs generation with retrieval, constraints, and human oversight; the synergy matters more than any single model.

Evidence of value varies by role, but patterns recur. Teams report cycle-time reductions for drafting and review, higher test coverage in software workflows, and faster exploration in design sprints. Quality improvements often come from consistency: the same policy applied uniformly, the same rubric for code, or a standardized structure for briefs. Yet value depends on measurement. Leaders who define success metrics—turnaround time, defect rates, compliance flags, or satisfaction—see clearer gains and quicker iteration.

Risks track the same axis as value: scale. Synthetic content amplifies both insight and error. Without retrieval or verification, models may fabricate details. With sensitive data, privacy and access control become paramount. Intellectual property concerns call for data provenance, usage policies, and content tracing. Energy use and efficiency also matter; smaller distilled models or caching can reduce footprint, often with little loss in output quality.

Governance translates intent into practice:

– Data management: Document sources, consent, filtering policies, and retention to support audits.

– Model evaluation: Track usefulness, safety, and bias with scenario sets and human review, not just aggregate metrics.

– Deployment controls: Use role-based access, rate limits, logging, and red teaming to spot abuse and drift.

– Change management: Communicate scope, train users, and set up feedback loops before expanding access.

Taken together, these practices produce repeatable wins. Generative systems thrive as part of a workflow, grounded in accurate context and judged by outcomes that matter to customers or stakeholders. The shift is from one-off demos to dependable capability, embedded where it shortens queues, reduces rework, or widens exploration.

Conclusion and Actionable Roadmap for Teams Adopting Generative AI

If you are a leader or practitioner weighing adoption, start with clarity: define one or two high-friction tasks and the metrics that express success. Build a thin slice that connects data, model, and user touchpoint, then measure deltas in speed, quality, and risk. Expand only after evaluation stabilizes. The goal is a compounding loop—collect feedback, improve prompts or fine-tuning, tighten retrieval, and prune failure modes—rather than a single moonshot that is hard to maintain.

A pragmatic roadmap:

– Week 0–2: Problem framing, baseline metrics, and data inventory; list constraints and red lines.

– Week 3–6: Prototype with retrieval and guardrails; assemble evaluation sets that reflect real tasks and edge cases.

– Week 7–10: Pilot with a small cohort; add telemetry, feedback forms, and escalation paths; quantify value and risks.

– Week 11+: Harden for production with access control, monitoring, and incident playbooks; plan periodic audits.

Technical guidance: prefer retrieval-augmented generation for knowledge-heavy tasks, and consider smaller specialized models where latency or cost is tight. Use structured prompts and schema-constrained generation when outputs must fit downstream systems. Automate evaluation with both quantitative signals and targeted human review. Where appropriate, explore fine-tuning on domain data to raise accuracy and reduce prompt complexity.
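When outputs must fit downstream systems, a lightweight complement to schema-constrained generation is to validate the model's output after the fact and reject or retry anything that does not conform. The sketch below does that check with the standard `json` module. It is a post-hoc validator, not constrained decoding, and the `TICKET_SCHEMA` fields are hypothetical.

```python
import json

# Hypothetical schema: field name -> required Python type.
TICKET_SCHEMA = {"category": str, "priority": int, "summary": str}

def parse_model_output(raw, schema):
    """Return (record, errors); record is None when validation fails."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        return None, [f"invalid JSON: {exc.msg}"]
    if not isinstance(data, dict):
        return None, ["top-level value must be an object"]
    errors = []
    for field, expected in schema.items():
        if field not in data:
            errors.append(f"missing field: {field}")
        elif type(data[field]) is not expected:
            errors.append(f"wrong type for {field}: expected {expected.__name__}")
    # Unknown fields are rejected so surprises do not leak downstream.
    errors.extend(f"unexpected field: {name}" for name in sorted(set(data) - set(schema)))
    return (data if not errors else None), errors

good = '{"category": "billing", "priority": 2, "summary": "Refund not received"}'
bad = '{"category": "billing", "priority": "high"}'
print(parse_model_output(good, TICKET_SCHEMA))
print(parse_model_output(bad, TICKET_SCHEMA))
```

The error list can be fed back into a retry prompt or logged as an evaluation signal, which ties this check into the automated evaluation loop described above.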

Organizational guidance: success usually hinges on workflows, not only models. Assign ownership for data governance, define review roles, and align incentives so teams benefit from using the system. Communicate limitations plainly, encourage users to flag odd outputs, and channel that feedback into the improvement loop. Treat this as a product, not a project; iteration, measurement, and maintenance are the engine.

In closing, generative AI is not a silver bullet, but it is a capable toolset when anchored in sound machine learning practices, thoughtful neural architectures, and well-governed models. Use it where it compresses time-to-value, pairs with human judgment, and fits your risk profile. Do that, and you will move beyond headlines to dependable results—systems that make work clearer, faster, and a little more creative.