The Role of Artificial Intelligence in Modern Industries

Outline:

1) Machine Learning: Foundations, Methods, and Real-World Value

2) Neural Networks: Architectures and Intuition

3) Deep Learning: Scaling Data, Compute, and Representation

4) Comparing Approaches: When to Use ML, Neural Networks, or Deep Learning

5) Conclusion: Practical Roadmap for Teams Adopting AI

Machine Learning: Foundations, Methods, and Real-World Value

Machine learning has become a dependable engine for turning data into predictions and insights across logistics, healthcare, finance, manufacturing, and energy. At its core, it learns patterns from historical examples and uses them to make decisions without being explicitly programmed for every rule. Three families of methods anchor the field: supervised learning (predict a labeled outcome), unsupervised learning (discover structure in unlabeled data), and reinforcement learning (learn actions through trial, feedback, and cumulative reward). Each family supports a wide spectrum of practical tasks, from forecasting demand to flagging faults in sensor streams.

A typical workflow looks like a measured relay race: define the problem and success metric, audit and prepare data, train candidate models, evaluate with holdout sets, and monitor performance after deployment. For classification, common metrics include accuracy, precision, recall, F1 score, and area under the ROC curve; for regression, mean absolute error and mean squared error are typical. The quality of input data often matters as much as the choice of algorithm. Missing values, concept drift, data leakage, and bias can quietly erode outcomes unless they are deliberately addressed with validation routines and fairness checks.
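To make the classification metrics above concrete, here is a minimal sketch that derives them from confusion-matrix counts. It assumes binary labels encoded as 0 and 1; the function name `classification_metrics` is illustrative and not tied to any particular library.

```python
def classification_metrics(y_true, y_pred):
    """Compute accuracy, precision, recall, and F1 for binary labels (0 or 1)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # true positives
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false alarms
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # misses
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # true negatives

    accuracy = (tp + tn) / len(pairs)
    # Guard against division by zero when nothing is predicted or present as positive.
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


if __name__ == "__main__":
    y_true = [1, 0, 1, 1, 0, 0, 1, 0]
    y_pred = [1, 0, 0, 1, 0, 1, 1, 0]
    print(classification_metrics(y_true, y_pred))
```

Precision and recall often move in opposite directions as the decision threshold changes, which is why reporting several metrics together tends to be more informative than accuracy alone.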

It helps to think of tool categories rather than single algorithms:

– Linear and logistic models for fast, interpretable baselines.

– Tree-based ensembles for handling nonlinearity and mixed data types.

– Distance and kernel methods for flexible decision boundaries.

– Probabilistic models to quantify uncertainty.

– Clustering and dimensionality reduction to reveal latent structure.

Starting with a simple baseline offers a sanity check; more complex methods should clear that bar with meaningful gains to justify added complexity.
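The baseline check described above can be sketched as a small gate. This is an illustration under stated assumptions: the baseline is a predict-the-training-mean regressor, error is mean absolute error, and the 10% minimum relative gain is a hypothetical threshold that each team would set for itself.

```python
def mean_absolute_error(y_true, y_pred):
    """Average absolute difference between targets and predictions."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def clears_baseline(y_train, y_test, model_preds, min_relative_gain=0.10):
    """Compare a candidate model against a mean-predictor baseline.

    Returns (baseline_mae, model_mae, passed). The 10% default margin is an
    assumption for illustration, not a recommended standard.
    """
    train_mean = sum(y_train) / len(y_train)
    baseline_preds = [train_mean] * len(y_test)
    baseline_mae = mean_absolute_error(y_test, baseline_preds)
    model_mae = mean_absolute_error(y_test, model_preds)
    if baseline_mae == 0:
        # The baseline is already perfect; nothing can improve on it.
        return baseline_mae, model_mae, False
    relative_gain = (baseline_mae - model_mae) / baseline_mae
    return baseline_mae, model_mae, relative_gain >= min_relative_gain


if __name__ == "__main__":
    print(clears_baseline([10, 20, 30], [10, 30], [12, 27]))
```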

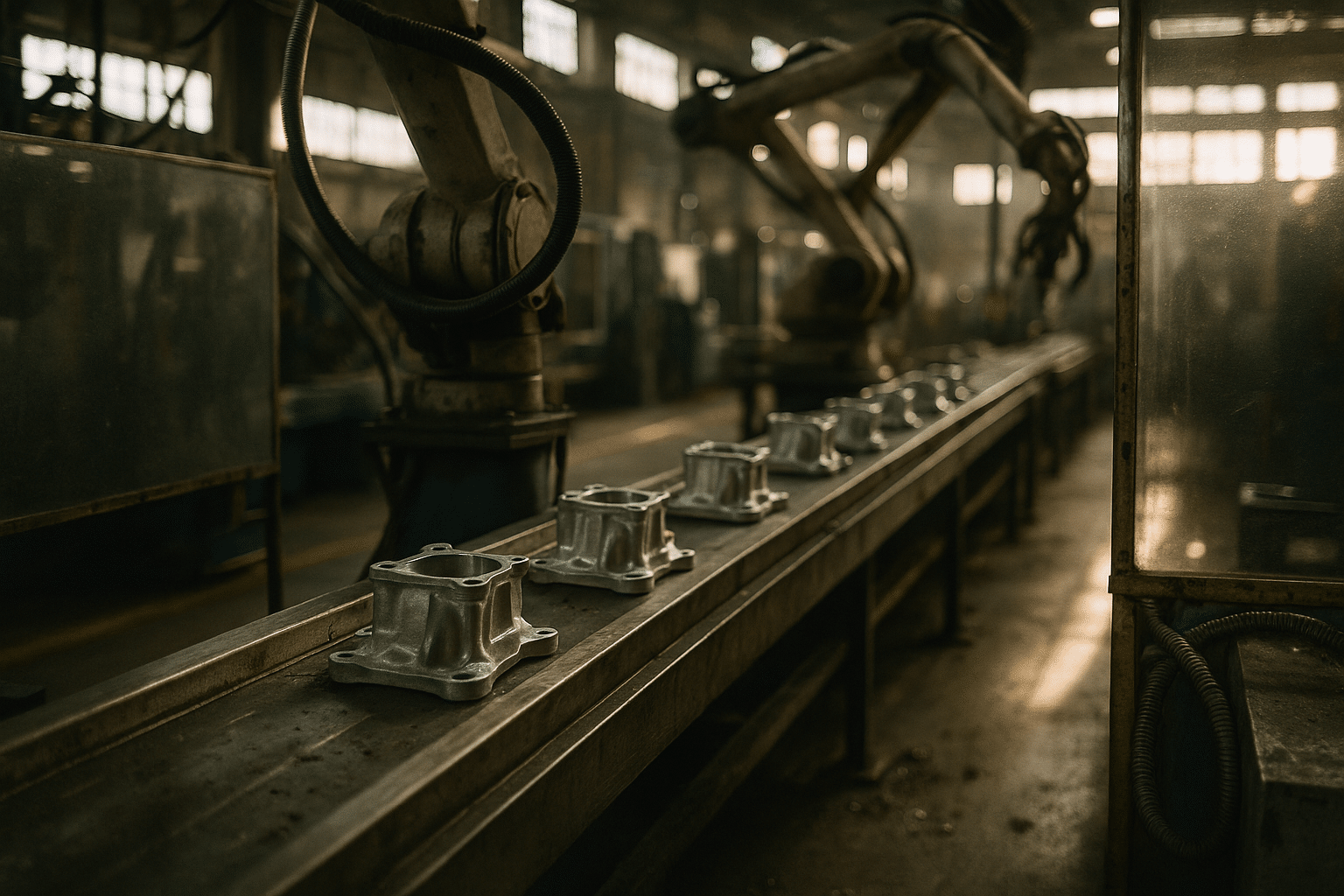

Concrete industry examples illustrate the payoff. In supply chains, supervised models align inventory to demand, reducing stockouts and overstock by identifying seasonality and regional patterns. In predictive maintenance, anomalous vibration signatures trigger inspections before failure, lowering unplanned downtime. In healthcare operations, triage models prioritize attention while leaving final decisions to clinicians, improving throughput and consistency. Risk teams assess probability of default using features like payment history, utilization patterns, and macro indicators, then apply human review for edge cases. Results vary by context, but published case studies frequently report material reductions in error rates and operational costs when models are well-governed and continuously updated.

Responsible practice underpins long-term value. Teams benefit from data documentation, versioned experiments, reproducible pipelines, and monitoring for drift. Human-in-the-loop validation anchors high-stakes decisions. Privacy is protected with principled access controls and aggregation methods. Finally, communicating uncertainty—confidence intervals, calibration plots, and scenario ranges—helps stakeholders make informed choices rather than relying on single-point predictions.

Neural Networks: Architectures and Intuition

Neural networks approximate functions by stacking layers of simple units, each performing a weighted sum followed by a nonlinearity. The magic lies not in any single neuron but in depth and composition: layers learn progressively more abstract features, from edges to shapes to concepts. Training proceeds via backpropagation, which computes gradients of an error signal with respect to every weight and nudges the model to reduce that error. Activations such as ReLU, GELU, or sigmoid shape the flow of information and gradients; initialization and normalization stabilize learning as depth grows. The result is a flexible model family capable of capturing complex relationships across images, audio, language, tabular data, and time series.
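As a rough illustration of "a weighted sum followed by a nonlinearity," the sketch below runs a forward pass through stacked dense layers in plain Python. The weights are hand-picked for readability rather than learned, and the helper names (`dense_layer`, `forward`) are hypothetical.

```python
def relu(x):
    """Rectified linear unit: pass positive values, zero out negatives."""
    return max(0.0, x)


def identity(x):
    return x


def dense_layer(inputs, weights, biases, activation):
    """One layer: each unit computes a weighted sum plus bias, then a nonlinearity."""
    outputs = []
    for unit_weights, bias in zip(weights, biases):
        weighted_sum = sum(w * x for w, x in zip(unit_weights, inputs)) + bias
        outputs.append(activation(weighted_sum))
    return outputs


def forward(inputs, layers):
    """Feed inputs through a stack of (weights, biases, activation) layers."""
    activations = inputs
    for weights, biases, activation in layers:
        activations = dense_layer(activations, weights, biases, activation)
    return activations


if __name__ == "__main__":
    layers = [
        ([[1.0, -1.0], [0.5, 0.5]], [0.0, 1.0], relu),  # hidden layer, 2 units
        ([[2.0, 1.0]], [0.5], identity),                # output layer, 1 unit
    ]
    print(forward([1.0, 2.0], layers))  # prints [3.0]
```

In the example, the first hidden unit receives a negative weighted sum and is zeroed by ReLU, so only the second unit contributes to the output. Training would adjust the weights via backpropagation, which this sketch does not implement.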

Architectural motifs reflect problem structure. Convolutional networks share weights across spatial neighborhoods, exploiting the fact that a useful pattern can appear anywhere in the input (translation equivariance) and drastically reducing parameters for image and signal tasks. Recurrent and gated variants process sequences by retaining a memory of prior steps, useful for forecasting, language modeling, and event streams. Attention mechanisms focus computation on the most relevant parts of the input, capturing long-range dependencies and allowing efficient parallelism in modern sequence models. Residual (skip) connections and normalization strategies counter vanishing gradients and accelerate training, making deeper stacks viable. Each design choice trades off capacity, compute, and data requirements.
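One common formulation of attention is scaled dot-product attention, sketched below in plain Python. The article does not commit to a specific variant, so treat this as one illustrative instance: each query scores every key, the scores are normalized with a softmax, and the output is the resulting weighted average of the values.

```python
import math


def softmax(scores):
    """Numerically stable softmax: subtract the max before exponentiating."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors.

    Returns (outputs, weights), where weights[i][j] is how much query i
    attends to position j.
    """
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        # Weighted average of the value vectors, one output dimension at a time.
        context = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(context)
        all_weights.append(weights)
    return outputs, all_weights


if __name__ == "__main__":
    out, w = attention([[1.0, 0.0]], [[1.0, 0.0], [0.0, 1.0]], [[10.0], [20.0]])
    print(out, w)
```

Because every query is scored against every key independently, the loop over queries can run in parallel, which is the source of the efficiency noted above.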

Key building blocks that practitioners revisit often include:

– Embeddings that turn categorical or token inputs into dense vectors where semantic relationships emerge.

– Normalization layers that stabilize activations across batches or features.

– Dropout and stochastic depth that reduce overfitting by introducing noise during training.

– Positional encodings and temporal features that ground models in order and rhythm.

– Multihead attention that learns complementary views of the same input.

Together, these components support a modular design kit, where swapping parts tailors the network to the data.
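Of the building blocks listed above, dropout is the simplest to show in a few lines. The sketch below uses the "inverted" variant, which rescales surviving activations during training so that no adjustment is needed at inference time. The function signature is an assumption for illustration and does not mirror any framework's API.

```python
import random


def dropout(activations, drop_prob, training, rng=random):
    """Inverted dropout: zero each activation with probability drop_prob during
    training and scale survivors by 1 / (1 - drop_prob); no-op at inference."""
    if not 0.0 <= drop_prob < 1.0:
        raise ValueError("drop_prob must be in [0, 1)")
    if not training or drop_prob == 0.0:
        return list(activations)
    keep_prob = 1.0 - drop_prob
    return [a / keep_prob if rng.random() < keep_prob else 0.0 for a in activations]


if __name__ == "__main__":
    rng = random.Random(0)  # seeded only to make the demo repeatable
    print(dropout([1.0, 1.0, 1.0, 1.0], 0.5, training=True, rng=rng))
    print(dropout([1.0, 1.0, 1.0, 1.0], 0.5, training=False))
```

The rescaling keeps the expected value of each activation unchanged between training and inference, which is why the same weights can be used in both modes.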

Interpreting neural networks remains an active area. Feature attributions estimate which inputs influence outputs; saliency maps highlight informative regions; surrogate models approximate complex functions locally for explanation. While these tools do not fully open the black box, they provide helpful signals for debugging, fairness assessments, and trust. In practice, teams balance interpretability with performance: in regulated contexts, simpler models or constrained architectures often complement or wrap a neural predictor to meet documentation and audit needs. With thoughtful design and monitoring, neural networks can deliver robust value while remaining governable.

Deep Learning: Scaling Data, Compute, and Representation

Deep learning refers to neural networks whose depth and capacity are scaled to learn rich representations directly from raw or minimally processed data. Its appeal is that feature extraction becomes part of the training objective: rather than handcrafting features, the model discovers hierarchies that capture shape, texture, tone, syntax, or temporal dynamics. This adaptability has driven advances in perception tasks, language understanding, speech recognition, and multimodal reasoning. The trade-off is that deeper networks typically demand larger datasets, careful regularization, and significant compute.

Generalization and training stability are central. Techniques like early stopping, weight decay, dropout, data augmentation, and mixup constrain overfitting and encourage generalization. Optimizers with momentum and adaptive step sizes speed convergence, while learning-rate warmup and cosine decay schedules avoid abrupt changes in step size. Precision management, such as mixed- or lower-precision arithmetic, can reduce memory footprints and accelerate training when numerically safe. Careful batching, gradient clipping, and checkpointing protect against instabilities and resource limits.
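The warmup and cosine schedule mentioned above can be written as a single function of the training step. This is a minimal sketch, assuming linear warmup to a peak rate followed by cosine decay to a floor; the parameter names and the example values are illustrative.

```python
import math


def learning_rate(step, total_steps, warmup_steps, peak_lr, min_lr=0.0):
    """Linear warmup to peak_lr, then cosine decay down to min_lr."""
    if step < warmup_steps:
        # Ramp up linearly so early, noisy gradients take small steps.
        return peak_lr * (step + 1) / warmup_steps
    decay_steps = max(1, total_steps - warmup_steps)
    progress = min(1.0, (step - warmup_steps) / decay_steps)
    cosine = 0.5 * (1.0 + math.cos(math.pi * progress))  # goes from 1 to 0
    return min_lr + (peak_lr - min_lr) * cosine


if __name__ == "__main__":
    for step in (0, 49, 100, 550, 1000):
        print(step, learning_rate(step, total_steps=1000, warmup_steps=100, peak_lr=1e-3))
```

The schedule is continuous at the handoff: the last warmup step and the first decay step both return the peak rate.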

Data strategy is as important as architecture. Self-supervised learning leverages unlabeled data by creating proxy tasks—masking, contrastive pairs, or predictive coding—so models learn useful representations before fine-tuning on smaller labeled sets. Transfer learning allows teams to start from a pretrained model and adapt it to new domains, a pragmatic way to gain accuracy with less annotation. Synthetic data and augmentation diversify input distributions, covering edge cases that are rare but consequential in production. Curating evaluation sets that reflect deployment conditions is essential for honest performance estimates.

Practical constraints deserve early attention:

– Latency: depth and width improve accuracy but may strain real-time requirements at the edge.

– Energy: larger models can increase power use; pruning, distillation, and quantization moderate costs.

– Reliability: ensembles and uncertainty estimates help detect out-of-distribution inputs.

– Safety: fail-safes, confidence thresholds, and human review reduce the risk of overconfident errors.

– Governance: documentation of data lineage, model changes, and known limitations supports audits.

By planning for these constraints, teams avoid surprises when pilots scale to production environments.
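To give a feel for the quantization item in the list above, the sketch below maps floating-point weights to 8-bit integers with a single symmetric scale. This is a deliberately simplified assumption: production toolchains typically add per-channel scales, calibration data, and hardware-specific handling that are not shown here.

```python
def quantize_int8(weights):
    """Symmetric quantization: map floats to integers in [-127, 127] with one scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from the integer codes."""
    return [q * scale for q in quantized]


if __name__ == "__main__":
    weights = [0.5, -1.27, 0.0, 1.0]
    codes, scale = quantize_int8(weights)
    restored = dequantize(codes, scale)
    worst = max(abs(w - r) for w, r in zip(weights, restored))
    print(codes, scale, worst)
```

Each weight is stored in one byte instead of four (relative to 32-bit floats), at the cost of a rounding error of at most half the scale per weight.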

Deep learning’s impact becomes durable when it is embedded in full workflows: data pipelines, evaluation gates, deployment targets, and feedback loops for continuous improvement. Rather than a single leap, transformation comes from iterative cycles that tighten the fit between model behavior and operational needs. This steady refinement compounds, raising quality and resilience without overpromising or relying on one-off benchmarks.

Comparing Approaches: When to Use ML, Neural Networks, or Deep Learning

Choosing the right technique is less about fashion and more about aligning constraints with capability. Conventional machine learning shines when data is structured, features are informative, and interpretability is required. Models like linear predictors or tree-based ensembles are light on compute, fast to train, and easy to explain, making them strong candidates for tabular problems such as credit risk, churn, and pricing. Neural networks extend reach to complex patterns and interactions, especially when engineered features are insufficient. Deep learning excels at perception, language, and richly structured signals, provided data scale and compute budget justify the investment.

A practical decision matrix can reduce guesswork:

– Data volume: small to moderate tabular datasets favor conventional ML; large unstructured corpora favor deep learning.

– Latency and footprint: tight real-time or on-device constraints point to compact models or distilled networks.

– Interpretability: regulatory or safety-critical settings lean toward simpler models or hybrid stacks with explainable front ends.

– Lifecycle cost: consider not just training, but monitoring, drift detection, retraining cadence, and labeling pipelines.

– Risk tolerance: high-impact errors may warrant conservative thresholds, human review, and redundancy.

Industry context adds texture. In manufacturing, anomaly detection on sensors may start with unsupervised methods and graduate to sequence models as patterns grow subtle. In logistics, routing and demand forecasting benefit from classical time-series baselines before experimenting with attention-based sequence models. In healthcare operations, triage and capacity planning often succeed with interpretable models, while imaging or waveform analysis may call for deep networks that learn spatial or temporal features. In finance, tabular risk models remain highly effective; natural language workflows like document parsing can gain from pretrained language models fine-tuned for domain terminology.

Operational readiness often decides winners. MLOps practices—automated data validation, reproducible training, model registries, and canary deployments—create a stable runway for whichever technique you select. Monitoring for drift and recalibration maintains performance as behavior, markets, or environments change. Performance should be tracked beyond headline metrics: calibration curves, cost-sensitive scores, and error stratification by subgroup reveal whether gains translate to fair and reliable outcomes. By combining a sober assessment of constraints with rigorous operations, teams can choose an approach that is not only accurate in the lab but also dependable in the field.
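One way to summarize a calibration curve as a single number is the expected calibration error (ECE). The sketch below is a simplified binary version, assuming equal-width probability bins and comparing the mean predicted probability of the positive class with the observed positive rate in each bin; the bin count of 10 is a conventional but arbitrary choice.

```python
def expected_calibration_error(probs, labels, n_bins=10):
    """Weighted average gap between predicted probability and observed frequency.

    probs: predicted probability of the positive class, each in [0, 1].
    labels: true outcomes, 0 or 1.
    """
    bins = [[] for _ in range(n_bins)]
    for p, y in zip(probs, labels):
        index = min(int(p * n_bins), n_bins - 1)  # p == 1.0 goes in the last bin
        bins[index].append((p, y))

    total = len(probs)
    ece = 0.0
    for bucket in bins:
        if not bucket:
            continue
        avg_confidence = sum(p for p, _ in bucket) / len(bucket)
        observed_rate = sum(y for _, y in bucket) / len(bucket)
        ece += (len(bucket) / total) * abs(avg_confidence - observed_rate)
    return ece


if __name__ == "__main__":
    # Predicts 0.9 four times, but only half of those cases are positive.
    print(expected_calibration_error([0.9, 0.9, 0.9, 0.9], [1, 0, 0, 1]))
```

Running the same function separately on each subgroup is one simple way to apply the error stratification described above.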

Conclusion: Practical Roadmap for Teams Adopting AI

Success with AI in modern industries comes from disciplined steps, not flashy demos. Start by defining the business problem with measurable success criteria—target the decision you aim to improve, the action that will follow a prediction, and the cost of false alarms versus misses. Audit data sources for coverage, quality, and drift risk; document assumptions and expected ranges. Establish baselines using simple models to create a yardstick for value. If a complex approach cannot clear that bar with a meaningful margin, pause and reassess rather than scaling complexity prematurely.
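The cost of false alarms versus misses can be turned directly into a decision threshold. The following sketch, with hypothetical cost values and function names, scans candidate thresholds on a validation set and picks the one with the lowest total cost.

```python
def total_cost(scores, labels, threshold, cost_false_alarm, cost_miss):
    """Cost of flagging every case whose score is at or above the threshold."""
    false_alarms = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 0)
    misses = sum(1 for s, y in zip(scores, labels) if s < threshold and y == 1)
    return false_alarms * cost_false_alarm + misses * cost_miss


def best_threshold(scores, labels, cost_false_alarm, cost_miss):
    """Try each distinct score (plus 'flag nothing') and keep the cheapest cutoff."""
    candidates = sorted(set(scores)) + [float("inf")]
    return min(
        candidates,
        key=lambda t: total_cost(scores, labels, t, cost_false_alarm, cost_miss),
    )


if __name__ == "__main__":
    scores = [0.1, 0.4, 0.6, 0.9]
    labels = [0, 1, 0, 1]
    # Misses are 10x as costly as false alarms, so the cutoff moves down.
    print(best_threshold(scores, labels, cost_false_alarm=1, cost_miss=10))  # prints 0.4
    # False alarms are 10x as costly as misses, so the cutoff moves up.
    print(best_threshold(scores, labels, cost_false_alarm=10, cost_miss=1))  # prints 0.9
```

The same model yields different operating points under different cost assumptions, which is why the costs are worth agreeing on before any model is trained.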

Build a lean, cross-functional team: domain experts to frame signals and constraints; data engineers to move and validate data; ML practitioners to design and train models; software engineers to deploy and monitor; and governance partners to review privacy, fairness, and compliance. Create a delivery cadence that goes from pilot to limited release to scaled deployment, with holdout evaluations at each stage. Bake observability into the product: track input distributions, confidence scores, calibration, and user feedback. Set up retraining triggers based on drift thresholds or business cycle milestones, and keep a rollback plan ready.
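A retraining trigger based on a drift threshold might look like the sketch below. It uses the population stability index (PSI) as the drift measure, which is one option among several and not something the roadmap prescribes. The 0.2 cutoff is a commonly quoted rule of thumb, treated here as an assumption to be tuned per feature and per business context.

```python
import math


def population_stability_index(reference, current, n_bins=10):
    """PSI between a reference sample and a current sample of one numeric feature."""
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / n_bins or 1.0  # fall back to 1.0 if the reference is constant

    def bin_fractions(values):
        counts = [0] * n_bins
        for v in values:
            index = int((v - lo) / width)
            index = max(0, min(n_bins - 1, index))  # clamp out-of-range values
            counts[index] += 1
        # A small floor avoids log(0) for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    ref = bin_fractions(reference)
    cur = bin_fractions(current)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref, cur))


def should_retrain(reference, current, threshold=0.2):
    """Flag retraining when drift exceeds the (assumed) PSI threshold."""
    return population_stability_index(reference, current) > threshold


if __name__ == "__main__":
    training_inputs = list(range(100))
    shifted_inputs = [v + 50 for v in range(100)]
    print(should_retrain(training_inputs, training_inputs))  # prints False
    print(should_retrain(training_inputs, shifted_inputs))   # prints True
```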

Pragmatic techniques help manage cost and risk:

– Leverage transfer learning or small, well-regularized networks when labels are scarce.

– Distill larger models into compact ones for edge or low-latency use.

– Use uncertainty thresholds to route ambiguous cases to humans.

– Document known failure modes and publish limitation notes for stakeholders.

– Align incentives: tie metrics to outcomes users care about, not just model accuracy.
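Routing ambiguous cases to humans, as suggested in the list above, can be as simple as a confidence band around the decision boundary. The band limits of 0.2 and 0.8 and the route labels below are placeholders for illustration; in practice they would be set from review capacity and error costs.

```python
def route_prediction(prob_positive, lower=0.2, upper=0.8):
    """Automate confident predictions; send the uncertain middle band to a person."""
    if not 0.0 <= prob_positive <= 1.0:
        raise ValueError("prob_positive must be a probability in [0, 1]")
    if prob_positive >= upper:
        return "auto_positive"
    if prob_positive <= lower:
        return "auto_negative"
    return "human_review"


if __name__ == "__main__":
    for p in (0.05, 0.5, 0.93):
        print(p, route_prediction(p))
```

Widening the band sends more cases to reviewers and lowers automated error, so the two limits act as a direct dial between workload and risk.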

Finally, treat explainability and responsibility as ongoing practices. Offer concise, audience-appropriate explanations for decisions; maintain records of dataset provenance and model changes; and evaluate performance across relevant subgroups to catch disparate impacts early. Clear communication builds trust: when teams share not just scores but also caveats and next steps, adoption increases and surprises decrease. With this roadmap, organizations can apply machine learning, neural networks, and deep learning where they offer real leverage—delivering improvements that endure beyond the pilot phase and translate into resilient, day-to-day value.