Understanding AI Integration in Modern Website Development

Introduction and Outline: Why AI Matters for Websites Now

Modern websites have outgrown their static roots. Today’s experiences adapt to context, interpret intent, and automate repetitive chores that once demanded human attention. Artificial intelligence (AI) sits at the center of this shift, turning clickstreams into signals, content into meaning, and interfaces into conversations. Whether you run a storefront, a knowledge hub, or a community platform, understanding how AI, machine learning (ML), and neural networks connect to real user goals can help you deliver interactions that feel quick, relevant, and considerate—without overpromising or inflating expectations.

Here is the map for what follows; each part is expanded with concrete techniques and comparisons:

– Foundations: AI concepts that influence web development choices, from search relevance to personalization and accessibility.

– Machine Learning: Data pipelines, model evaluation, and deployment patterns that work within latency and privacy budgets.

– Neural Networks: How modern architectures power language, vision, and recommendation tasks, plus practical optimization tips.

– Integration Patterns: Client, edge, and server strategies; monitoring; cost management; and responsible use guidelines.

– Conclusion and Roadmap: A stepwise plan your team can adapt in weeks, not months, with measurable checkpoints.

This topic matters because the constraints are concrete. Users reward low latency, transparency, and predictability; teams need measurable wins, maintainable systems, and manageable costs. AI helps when it reliably reduces friction: fewer zero-result searches, faster support flows, tighter content safety, and richer accessibility. But every capability brings trade-offs (data collection vs. privacy, model size vs. responsiveness, personalization vs. fairness), so we will emphasize choices you can justify with metrics. Think of this article as a field guide: honest about limits, concrete about paths forward, and written for practitioners who want progress without hype.

Artificial Intelligence in Web Development: Capabilities, Trade-offs, and Patterns

AI in websites is less about spectacle and more about steady improvements stitched into familiar flows. Search boxes learn synonyms and intent, recommendations surface items that align with context, and content filters reduce exposure to harmful material. A pragmatic lens asks: which user actions repeatedly underperform today, and which AI capability can lift them within acceptable latency and cost? Consider the following areas that frequently deliver value when approached methodically:

– Information retrieval: Reranking search results using semantic signals often improves perceived relevance, especially for ambiguous queries.

– Personalization: On-site behavior can refine sorting and messaging, provided disclosures are clear and opt-outs work as expected.

– Assistance: Task-oriented chat or guided flows can shorten paths for known intents (order status, returns, account updates).

– Accessibility: Captioning, alt-text suggestions, and reading-level adjustments broaden reach and reduce manual effort.

– Safety: Classification models can flag spam, fraud, or policy-violating content before it reaches others.

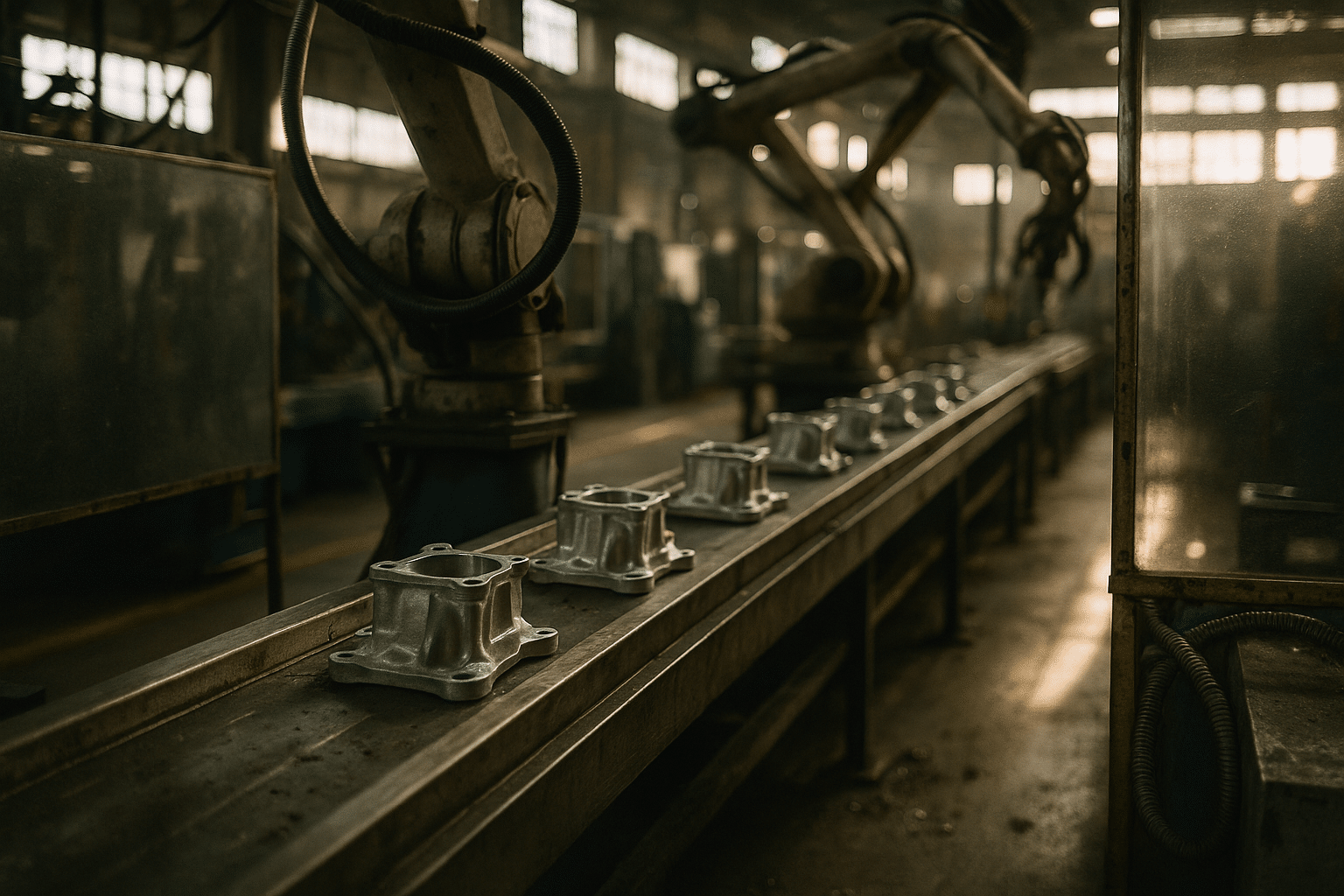

Integration choices start with architecture. Client-side inference removes round trips but must respect device constraints; edge inference reduces latency for common models; server-side inference centralizes control and monitoring. Many teams adopt a hybrid: lightweight checks and caching near the user, heavier reasoning in a controlled environment. Latency budgets are concrete: keeping interactions under roughly 200 ms end-to-end often preserves a sense of immediacy, while progressive rendering or streaming can keep users engaged when tasks need longer.
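
A latency budget is easier to reason about with numbers. The sketch below assumes you already collect end-to-end timings in milliseconds; it computes a nearest-rank percentile and compares the 95th percentile with a 200 ms budget. The budget value, the choice of p95, and the sample timings are illustrative assumptions, not recommendations.

```python
import math

# Assumption: a 200 ms end-to-end budget, echoing the rough figure above.
LATENCY_BUDGET_MS = 200.0


def percentile(samples: list[float], pct: float) -> float:
    """Nearest-rank percentile: the smallest sample with at least pct% of samples at or below it."""
    if not samples:
        raise ValueError("need at least one latency sample")
    ordered = sorted(samples)
    rank = math.ceil(pct / 100 * len(ordered))  # 1-based rank
    return ordered[max(rank, 1) - 1]


def within_budget(samples: list[float], budget_ms: float = LATENCY_BUDGET_MS,
                  pct: float = 95.0) -> bool:
    """True when the chosen percentile of end-to-end latency fits the budget."""
    return percentile(samples, pct) <= budget_ms


if __name__ == "__main__":
    # Hypothetical timings (ms) for one interaction, including a single slow outlier.
    timings = [120, 135, 150, 160, 170, 180, 185, 190, 195, 450]
    print(sum(timings) / len(timings))  # 193.5: the mean looks fine
    print(percentile(timings, 95))      # 450: the tail does not
    print(within_budget(timings))       # False
```

With these sample timings the mean sits under the budget while p95 does not, because one slow request dominates the tail. Budgeting on a high percentile rather than the mean surfaces the slow experiences users actually notice.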

Compare AI-driven approaches with rules-based systems. Rules are transparent and fast, ideal for stable logic like eligibility or pricing boundaries. AI shines where patterns shift and combinatorial complexity explodes—intent detection, language understanding, and anomaly spotting. A healthy pattern is to start with rules as guardrails and add AI as an advisor: the model proposes, the rules constrain. For example, an intent classifier can route support queries, while deterministic checks enforce authentication and privacy constraints. This layered approach reduces surprises and simplifies audits.
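
The "model proposes, rules constrain" pattern fits in a few lines. In this sketch, `classify_intent` is a hypothetical keyword stand-in for a trained intent classifier, and the intent names and confidence threshold are assumptions. What matters is the ordering: the model's suggestion is accepted only after deterministic checks for confidence and authentication pass.

```python
from dataclasses import dataclass


@dataclass
class SupportRequest:
    text: str
    authenticated: bool


def classify_intent(text: str) -> tuple[str, float]:
    """Hypothetical stand-in for a trained intent classifier: returns (intent, confidence)."""
    lowered = text.lower()
    if "refund" in lowered or "return" in lowered:
        return "returns", 0.91
    if "order" in lowered:
        return "order_status", 0.84
    return "general", 0.40


REQUIRES_AUTH = {"order_status", "returns"}  # intents that touch account data
MIN_CONFIDENCE = 0.7  # assumption: tune on held-out data


def route(request: SupportRequest) -> str:
    intent, confidence = classify_intent(request.text)  # the model proposes
    if confidence < MIN_CONFIDENCE:
        return "human_agent"  # rule: uncertain predictions go to a safe default
    if intent in REQUIRES_AUTH and not request.authenticated:
        return "login_required"  # rule: authentication is never left to the model
    return intent
```

Because the authentication check is plain code, it can be audited and unit-tested independently of the model, and swapping in a better classifier cannot weaken it.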

Cost control and maintainability require discipline. Caching repeated queries, batching requests, and throttling low-value traffic can significantly reduce inference calls. Smaller models or quantized variants often achieve acceptable quality while lowering memory and compute needs. Observability completes the picture: track latencies, error rates, and confidence distributions; watch for drift in input patterns; set thresholds that degrade gracefully to rules-based fallbacks. With those safeguards, AI becomes a steady contributor rather than a brittle risk.

Machine Learning Essentials: Data, Evaluation, and Deployment for the Web

Machine learning is the engine that transforms data into predictive behavior, and the web offers abundant signals: search queries, click paths, dwell times, content features, and feedback. Turning that raw material into reliable models follows a repeatable pipeline that favors clarity over complexity. The basic steps are familiar, but small details—like feature stability and annotation quality—often determine outcomes more than exotic algorithms.

– Problem framing: Define a measurable goal (e.g., reduce zero-result searches, increase successful self-service resolutions) and a target metric (e.g., recall@k, click-through, task completion).

– Data collection and labeling: Combine logs, content metadata, and targeted annotations. Even a few hundred high-quality labels can outperform large noisy sets.

– Feature engineering: Normalize text, handle missing values, and create robust signals (e.g., recency, frequency, semantic embeddings). Favor features that are stable over time.

– Splitting strategy: Use time-aware splits to prevent leakage; reserve a holdout set for final checks.

– Model selection: Start with baselines (linear, tree-based) before escalating to heavier architectures; simpler models aid transparency.

– Evaluation: Examine precision, recall, calibration, and subgroup performance to avoid uneven experiences.

– Deployment: Package the model with versioning; define rollback rules; preserve a rules-based fallback path.
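
Two of these steps, time-aware splitting and evaluation, are small enough to show directly. This sketch assumes labeled log rows of the form (date, query, relevant item IDs), a hypothetical shape chosen for illustration. It trains on everything before a cutoff date and evaluates on everything after, and computes recall@k for ranked results plus precision and recall for a binary classifier.

```python
from datetime import date

# Hypothetical labeled row: (event date, query, IDs of items judged relevant)
Row = tuple[date, str, set[str]]


def time_split(rows: list[Row], cutoff: date) -> tuple[list[Row], list[Row]]:
    """Train on rows before the cutoff and evaluate on rows from the cutoff onward,
    so no future behavior leaks into training (unlike a random shuffle)."""
    train = [r for r in rows if r[0] < cutoff]
    evaluation = [r for r in rows if r[0] >= cutoff]
    return train, evaluation


def recall_at_k(ranked_ids: list[str], relevant: set[str], k: int) -> float:
    """Fraction of the relevant items that appear among the top-k ranked results."""
    if not relevant:
        return 0.0
    return len(set(ranked_ids[:k]) & relevant) / len(relevant)


def precision_recall(y_true: list[int], y_pred: list[int]) -> tuple[float, float]:
    """Precision and recall for binary labels (1 = positive)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall
```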

Offline metrics are necessary but not sufficient. Real users behave differently from test sets, and content mixes shift with seasons and campaigns. A staged rollout with canaries and A/B testing validates that gains are durable and side effects are minimal. Teams often monitor both outcome metrics (e.g., successful task completions) and health metrics (latency, error rates). If a new model wins on relevance but slows the page, its net impact may be negative; in that case, pruning, quantization, or caching can recover the budget.
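
Deciding whether an A/B difference is more than noise is a standard statistics question. One common choice for rates such as task completion is a two-proportion z-test, sketched here with a pooled standard error and a two-sided p-value derived from `math.erfc`. It assumes independent users and reasonably large samples; it says nothing about whether a gain will last, which is what a longer-running test is for.

```python
import math


def two_proportion_z_test(success_a: int, n_a: int, success_b: int, n_b: int) -> tuple[float, float]:
    """Two-sided z-test for a difference between two rates (e.g., task completion).
    Returns (z, p_value); assumes independent users and reasonably large samples."""
    p_a, p_b = success_a / n_a, success_b / n_b
    pooled = (success_a + success_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("rates of 0% or 100% in both arms: the test is undefined")
    z = (p_b - p_a) / se
    # Two-sided p-value: 2 * (1 - Phi(|z|)) equals erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value
```

With made-up counts of 1,000 completions in 10,000 control sessions versus 1,100 in 10,000 treatment sessions, z is about 2.31 and p about 0.02. A significant outcome metric is only half the decision: run the same comparison on health metrics such as error rates, and check latency percentiles, before calling the change a net win.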

Operational excellence keeps ML sustainable. Version datasets alongside models so experiments are reproducible. Document labeling guidelines and measure agreement to catch ambiguity early. Track input distributions to spot drift; if query lengths or languages shift, performance can degrade quietly. Establish a retraining cadence aligned with business cycles—monthly or quarterly is common for content-heavy sites—balancing compute costs against freshness. Finally, align with legal and privacy teams: limit retention, respect consent, and provide visible controls for personalization. When these practices become routine, ML moves from sporadic experiments to predictable delivery.
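
Labeling agreement and input drift can both be tracked with short, well-known statistics. The sketch below uses Cohen's kappa (agreement between two annotators, corrected for chance) and the Population Stability Index (PSI) over binned feature values such as query length. The bin edges you choose and the PSI reading guide in the docstring are common rules of thumb, not thresholds taken from this article.

```python
import bisect
import math
from collections import Counter


def cohen_kappa(labels_a: list[str], labels_b: list[str]) -> float:
    """Chance-corrected agreement between two annotators labeling the same items."""
    n = len(labels_a)
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    count_a, count_b = Counter(labels_a), Counter(labels_b)
    expected = sum(count_a[c] * count_b[c] for c in count_a.keys() | count_b.keys()) / (n * n)
    if expected == 1.0:
        return float("nan")  # both annotators used one identical label; kappa is undefined
    return (observed - expected) / (1 - expected)


def bin_proportions(values: list[float], edges: list[float]) -> list[float]:
    """Share of values per bin; edges [e0, e1, ...] define bins (-inf, e0), [e0, e1), ..., [e_last, inf)."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect.bisect_right(edges, v)] += 1
    return [c / len(values) for c in counts]


def psi(expected: list[float], actual: list[float], eps: float = 1e-6) -> float:
    """Population Stability Index between a baseline and a current binned distribution.
    Common rule of thumb: < 0.1 stable, 0.1 to 0.25 worth a look, > 0.25 a significant shift."""
    total = 0.0
    for e, a in zip(expected, actual):
        e, a = max(e, eps), max(a, eps)  # avoid log(0) for empty bins
        total += (a - e) * math.log(a / e)
    return total
```

For example, computing `bin_proportions` over last quarter's query lengths and this week's, then comparing them with `psi`, gives one number to alert on when queries get longer or shorter. A kappa well below 1 on a shared sample of items suggests the labeling guidelines need clarification.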

Neural Networks Demystified: From Intuition to Practical Web Use

Neural networks approximate functions by stacking simple units—neurons—that compute weighted sums and pass them through nonlinear activations. With enough examples, they learn representations that tease out structure in language, images, audio, and behavior. Backpropagation adjusts weights to reduce error, while regularization techniques discourage overfitting. That’s the core story; the practical question for web teams is when these models unlock value that simpler methods cannot.
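
To make that core story concrete, here is a toy two-layer network in plain Python. Each hidden neuron computes a weighted sum and applies `tanh`, a sigmoid output neuron produces a probability, and `backprop` applies the chain rule layer by layer to get gradients of a cross-entropy loss with an L2 penalty (one common regularization technique). The layer sizes, weights, and learning rate are arbitrary illustrative choices; real projects would use a framework with automatic differentiation.

```python
import math

# Params = (W1, b1, w2, b2): hidden weights (one row per hidden neuron), hidden biases,
# output weights, output bias. Sizes are arbitrary and tiny for illustration.


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def forward(params, x):
    W1, b1, w2, b2 = params
    # Hidden neurons: weighted sum of inputs plus bias, then a nonlinear activation (tanh).
    h = [math.tanh(sum(w * xi for w, xi in zip(row, x)) + b) for row, b in zip(W1, b1)]
    # Output neuron: weighted sum of hidden activations, squashed into a probability.
    y_hat = sigmoid(sum(w * hi for w, hi in zip(w2, h)) + b2)
    return h, y_hat


def loss(params, x, y, l2):
    """Binary cross-entropy plus an L2 penalty on weights (biases are not penalized)."""
    W1, _, w2, _ = params
    _, y_hat = forward(params, x)
    penalty = l2 * (sum(w * w for row in W1 for w in row) + sum(w * w for w in w2))
    return -(y * math.log(y_hat) + (1 - y) * math.log(1 - y_hat)) + penalty


def backprop(params, x, y, l2):
    """Gradients of `loss` with respect to every parameter, via the chain rule."""
    W1, b1, w2, b2 = params
    h, y_hat = forward(params, x)
    d_out = y_hat - y  # dLoss/d(output pre-activation) for sigmoid + cross-entropy
    g_w2 = [d_out * hi + 2 * l2 * w for hi, w in zip(h, w2)]
    # Back through each tanh: d tanh(z)/dz = 1 - tanh(z)**2
    d_hidden = [d_out * w * (1 - hi * hi) for w, hi in zip(w2, h)]
    g_W1 = [[dh * xi + 2 * l2 * w for xi, w in zip(x, row)] for dh, row in zip(d_hidden, W1)]
    return g_W1, d_hidden, g_w2, d_out


def sgd_step(params, grads, lr):
    """One gradient-descent update: move every parameter against its gradient."""
    W1, b1, w2, b2 = params
    gW1, gb1, gw2, gb2 = grads
    return (
        [[w - lr * g for w, g in zip(row, grow)] for row, grow in zip(W1, gW1)],
        [b - lr * g for b, g in zip(b1, gb1)],
        [w - lr * g for w, g in zip(w2, gw2)],
        b2 - lr * gb2,
    )
```

A quick sanity check, often worth keeping as a test, is to compare a backprop gradient with a finite-difference estimate; if they disagree, the chain-rule bookkeeping is wrong.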

Convolutional networks excel at spatial patterns, making them a sensible choice for tasks like thumbnail quality checks, logo detection in uploads, or layout analysis for accessibility. Recurrent and attention-based models handle sequences: they parse sentences, summarize product descriptions, or classify user intents. Embeddings turn words, items, and even entire pages into vectors in a common space, enabling semantic search and related-content discovery. In each case, web workloads favor models that produce compact, fast representations suitable for caching and reranking.
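
Semantic search over embeddings reduces to ranking by vector similarity. The sketch below uses cosine similarity over hypothetical three-dimensional vectors; in practice an embedding model (not shown) would produce higher-dimensional vectors for both the query and each page, and the page vectors would be computed once and cached.

```python
import math


def cosine(u: list[float], v: list[float]) -> float:
    """Cosine similarity: 1.0 for same direction, 0.0 for orthogonal vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


# Hypothetical, precomputed page embeddings (real models emit hundreds of dimensions).
PAGE_EMBEDDINGS = {
    "returns-policy": [0.9, 0.1, 0.0],
    "shipping-times": [0.2, 0.9, 0.1],
    "gift-cards": [0.0, 0.2, 0.9],
}


def semantic_search(query_vec: list[float], k: int = 2) -> list[tuple[str, float]]:
    """Return the k pages whose embeddings point most nearly in the query's direction."""
    scored = [(page, cosine(query_vec, vec)) for page, vec in PAGE_EMBEDDINGS.items()]
    return sorted(scored, key=lambda item: item[1], reverse=True)[:k]
```

Passing a page's own vector instead of a query vector (and skipping the page itself) gives a simple related-content list. At large catalog sizes, an approximate nearest-neighbor index usually replaces the linear scan shown here.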

Trade-offs are unavoidable. Larger networks often deliver higher accuracy but demand more compute and memory; smaller models respond faster and are easier to deploy at the edge. Practical techniques help bridge the gap:

– Quantization: Storing weights at lower precision (e.g., 8-bit integers instead of 32-bit floats) cuts weight memory roughly fourfold, often with a modest accuracy impact.

– Distillation: A small “student” model learns to mimic a larger “teacher,” capturing much of its capability at a fraction of the cost.

– Pruning: Removing low-importance connections reduces model size; speed gains usually depend on structured pruning or a runtime that exploits sparsity.

– Caching and reuse: Persist embeddings for popular queries and items to avoid recomputation.
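
Quantization and pruning are easy to see on a single list of weights. This sketch shows symmetric per-tensor 8-bit quantization (one scale factor maps the largest absolute weight to 127) and unstructured magnitude pruning. Production toolkits add per-channel scales, calibration data, and fine-tuning after pruning, none of which is shown; Python integers stand in for the 1-byte values a real runtime would store.

```python
def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor quantization: map [-max|w|, max|w|] onto integers [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: list[int], scale: float) -> list[float]:
    """Approximate original weights from their int8 codes."""
    return [v * scale for v in q]


def magnitude_prune(weights: list[float], fraction: float) -> list[float]:
    """Zero out the smallest-magnitude `fraction` of weights (unstructured pruning)."""
    n_prune = int(len(weights) * fraction)
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:n_prune]:
        pruned[i] = 0.0
    return pruned
```

Each dequantized weight lies within half a scale step of the original, which is why accuracy often changes only modestly; whether it does for a given model is something to measure, not assume.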

Integration patterns revolve around latency and resilience. For search, a two-stage pipeline is common: a lightweight model narrows candidates, and a heavier model reranks the top results. For assistance, streaming partial outputs keeps users engaged while the model refines the answer. For safety, an ensemble of specialized classifiers can outperform a single general model, especially when policies evolve. Guardrails matter: limit generation to approved sources, apply deterministic filters after model output, and log decisions for audit.
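
A two-stage search pipeline with a deterministic post-filter might look like the sketch below. Stage one scores every document with cheap word overlap and keeps a few candidates; stage two reranks only those candidates with a costlier scorer, here a hypothetical bigram-overlap rule standing in for a neural reranker. A fixed blocklist (an assumed policy) then removes disallowed results regardless of score.

```python
import heapq

BLOCKED_TERMS = {"internal"}  # hypothetical policy list, enforced after ranking


def cheap_score(query: str, doc: str) -> float:
    """Stage 1: share of query words present in the document; fast enough for every doc."""
    q, d = set(query.lower().split()), set(doc.lower().split())
    return len(q & d) / len(q) if q else 0.0


def bigrams(text: str) -> set[tuple[str, str]]:
    words = text.lower().split()
    return set(zip(words, words[1:]))


def expensive_score(query: str, doc: str) -> float:
    """Stage 2 stand-in for a heavier reranker: also rewards matching word order."""
    qb = bigrams(query)
    phrase = len(qb & bigrams(doc)) / len(qb) if qb else 0.0
    return cheap_score(query, doc) + phrase


def search(query: str, docs: list[str], n_candidates: int = 3, k: int = 2) -> list[str]:
    candidates = heapq.nlargest(n_candidates, docs, key=lambda d: cheap_score(query, d))
    reranked = sorted(candidates, key=lambda d: expensive_score(query, d), reverse=True)
    # Deterministic guardrail: drop anything that matches the blocklist, whatever its score.
    allowed = [d for d in reranked if not (BLOCKED_TERMS & set(d.lower().split()))]
    return allowed[:k]
```

Only `n_candidates` documents ever reach the expensive scorer, so its cost stays fixed as the corpus grows; the blocklist runs last so no ranking change can bypass it.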

Finally, consider fairness and robustness. Measure performance across languages, devices, and segments to prevent uneven quality. Stress-test with adversarial prompts or edge-case images. Provide clear user controls for personalization and data use. These steps reduce surprises and help ensure neural networks serve all users with consistent care—an essential ingredient for trust in public-facing products.
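
Measuring quality per segment is mostly bookkeeping. This sketch assumes evaluation records of the form (segment, true label, predicted label), with segments such as language or device type, and flags any segment whose accuracy trails the best one by more than an assumed 5-point gap.

```python
from collections import defaultdict


def accuracy_by_segment(records: list[tuple[str, int, int]]) -> dict[str, float]:
    """records: (segment, true label, predicted label); returns accuracy per segment."""
    correct: dict[str, int] = defaultdict(int)
    total: dict[str, int] = defaultdict(int)
    for segment, y_true, y_pred in records:
        total[segment] += 1
        correct[segment] += int(y_true == y_pred)
    return {seg: correct[seg] / total[seg] for seg in total}


def flag_gaps(per_segment: dict[str, float], max_gap: float = 0.05) -> list[str]:
    """Segments trailing the best segment by more than max_gap (an assumed tolerance)."""
    best = max(per_segment.values())
    return sorted(seg for seg, acc in per_segment.items() if best - acc > max_gap)
```

Small segments give noisy estimates, so in practice it helps to report the count next to each accuracy and to require a minimum sample size before acting on a flagged gap.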

Conclusion and Practical Roadmap for Web Teams

If you build for the web, AI is a toolkit for reducing friction, not a magic wand. The most reliable progress comes from choosing a narrow, valuable task, proving it with data, and only then expanding the scope. By treating AI, ML, and neural networks as layered components—rules for guardrails, ML for patterns, and neural models for nuanced understanding—you can ship improvements that users feel without destabilizing your stack.

Here is a pragmatic, time-bound roadmap you can adapt:

– Weeks 0–2: Identify a high-leverage use case (e.g., search relevance, content safety). Define success metrics and a latency budget. Gather a labeled sample and establish annotation guidelines.

– Weeks 3–4: Build baselines with simple models and a rules-based fallback. Validate offline; prepare dashboards for latency, errors, and confidence.

– Weeks 5–6: Introduce a compact neural model where it clearly helps (embeddings for semantic search, a classifier for safety). Add quantization or pruning if needed to meet performance targets.

– Weeks 7–8: Run a canary, then an A/B test. Monitor outcomes and subgroup metrics. Document results and decision criteria for rollout or rollback.
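
For the canary and A/B steps, users need stable assignments: the same person should see the same variant on every visit, and raising the canary share should only add users, never reshuffle them. Hashing the user ID together with an experiment name, as sketched below with the standard library's `hashlib`, is one common way to get both properties; the bucket count and experiment names are assumptions.

```python
import hashlib

BUCKETS = 10_000  # assumption: 0.01% resolution for rollout shares


def bucket(user_id: str, experiment: str) -> int:
    """Deterministic bucket in [0, BUCKETS) for this user within this experiment."""
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % BUCKETS


def assign(user_id: str, experiment: str, treatment_share: float) -> str:
    """'treatment' for roughly treatment_share of users; raising the share only adds users."""
    return "treatment" if bucket(user_id, experiment) < treatment_share * BUCKETS else "control"
```

Moving from a 1% canary (`treatment_share=0.01`) to a 50% A/B split keeps every canary user in treatment, because their bucket number does not change. Including the experiment name in the hash keeps different experiments from always selecting the same users.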

Sustainability turns pilots into platforms. Version datasets and models, automate evaluation, and schedule retraining. Budget for inference with caching layers and throttles for low-value traffic. Coordinate with policy and privacy stakeholders to keep data practices clear and respectful. Most importantly, narrate the impact to your organization in concrete terms: faster answers, fewer dead ends, and more accessible content.
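
A throttle for low-value traffic can be as small as a token bucket: each request spends a token, tokens refill at a fixed rate, and short bursts are allowed up to a capacity. The sketch below is a single-process version with an injectable clock so it can be tested; the rate and capacity, and what counts as low-value traffic, are assumptions left to your own budget.

```python
import time
from collections.abc import Callable


class TokenBucket:
    """Allow bursts of up to `capacity` requests, refilling at `rate` tokens per second."""

    def __init__(self, rate: float, capacity: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.rate = rate
        self.capacity = capacity
        self.tokens = capacity  # start full so an initial burst is allowed
        self.clock = clock      # injectable for tests
        self.last = clock()

    def allow(self, cost: float = 1.0) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, never beyond capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False
```

When `allow()` returns False, the request can still be served from cache or by the rules-based path instead of being dropped.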

AI integration thrives on constraints: a performance ceiling, a cost cap, and well-defined guardrails. Within that frame, even modest models can deliver meaningful gains in clarity and speed. Start focused, measure honestly, and iterate. Your users will notice the smoother path, even if the intelligence remains quietly behind the curtain.